
Reviewers also mentioned it helps uncover customer behavior trends, which makes sense given how connected the platform is across channels.

That said, not everything is perfect. I saw a few users mentioning that the platform can feel laggy at times, particularly when working with large datasets or switching between modules. There were also a couple of mentions about reporting being harder to navigate, especially when tracking engagement across multiple channels.

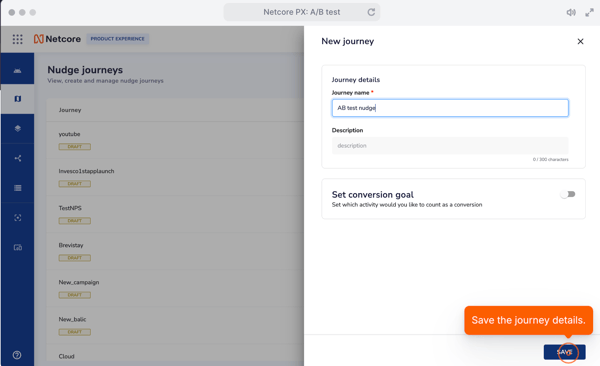

Overall though, Netcore comes across as a strong contender for teams that want to move quickly and test messaging at scale. In my opinion, it’s built with customer engagement in mind, and it feels like a good fit for CRM teams, growth marketers, and product-led organizations looking to optimize experiences without needing to build custom testing flows from scratch.

If I were running in-app experiments or nudges across channels, I’d seriously consider giving this one a go.

What I like about Netcore Customer Engagement and Experience Platform:

- I really like how A/B testing is integrated directly into the journey builder. From what I’ve seen, it’s easy to drop in a test node and experiment with different content across multiple channels.

- Reviewers consistently mention that the platform is user-friendly when it comes to launching campaigns. That kind of simplicity is a huge plus when you’re juggling multiple experiments.

What G2 users like about Netcore Customer Engagement and Experience Platform:

“Netcore Cloud has maximized our productivity on every front. Leveraging it’s email and AMP, we’ve streamlined communication channels seamlessly. The email AMP and A/B testing tools have facilitated precise targeting and optimization. They have provided deep insights, guiding our decision-making process effectively. Netcore Cloud is a game-changer for businesses striving for efficiency and effectiveness in digital engagement.”

– Netcore Customer Engagement and Experience Platform Review, Surbhi A, Senior Executive, Digital Marketing.

What I dislike about Netcore Customer Engagement and Experience Platform:

- Some users pointed out that parts of the platform can feel a bit laggy, especially when handling large data sets. I’d probably find that frustrating if I were working under tight deadlines.

- I also noticed a few users mentioning that there’s a bit of a learning curve. That’s pretty common with platforms like this, but it’s still something I’d keep in mind going in.

What G2 users dislike about Netcore Customer Engagement and Experience Platform:

“The thing I dislike about Netcore is that it’s a bit difficult to understand at first. And there is no user guide video. If we want to create or use any analytics, we need to connect with the POC. If there are any tutorial videos, it will be better for new users.”

– Netcore Customer Engagement and Experience Platform Review, Ganavi K, Online Marketing Executive.

5. LaunchDarkly

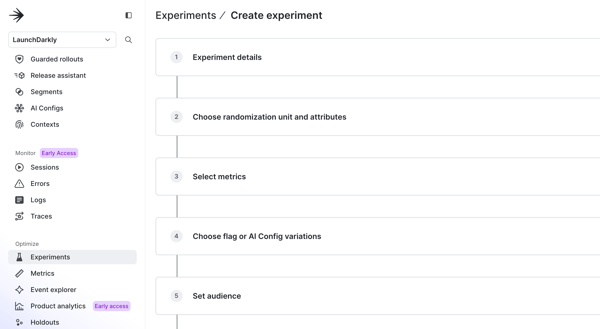

I’ve known LaunchDarkly primarily as a feature management and feature flagging platform, not your typical A/B testing tool. But after looking into it more closely and digging through user reviews, it’s clear that it plays a valuable role in experimentation, just in a different way than most visual or marketer-friendly platforms.

It’s geared more toward development and product teams who want to test, release, and roll back features without redeploying code.

What stood out most to me from the reviews is how widely appreciated LaunchDarkly is for helping teams safely ship new features and run backend experiments.

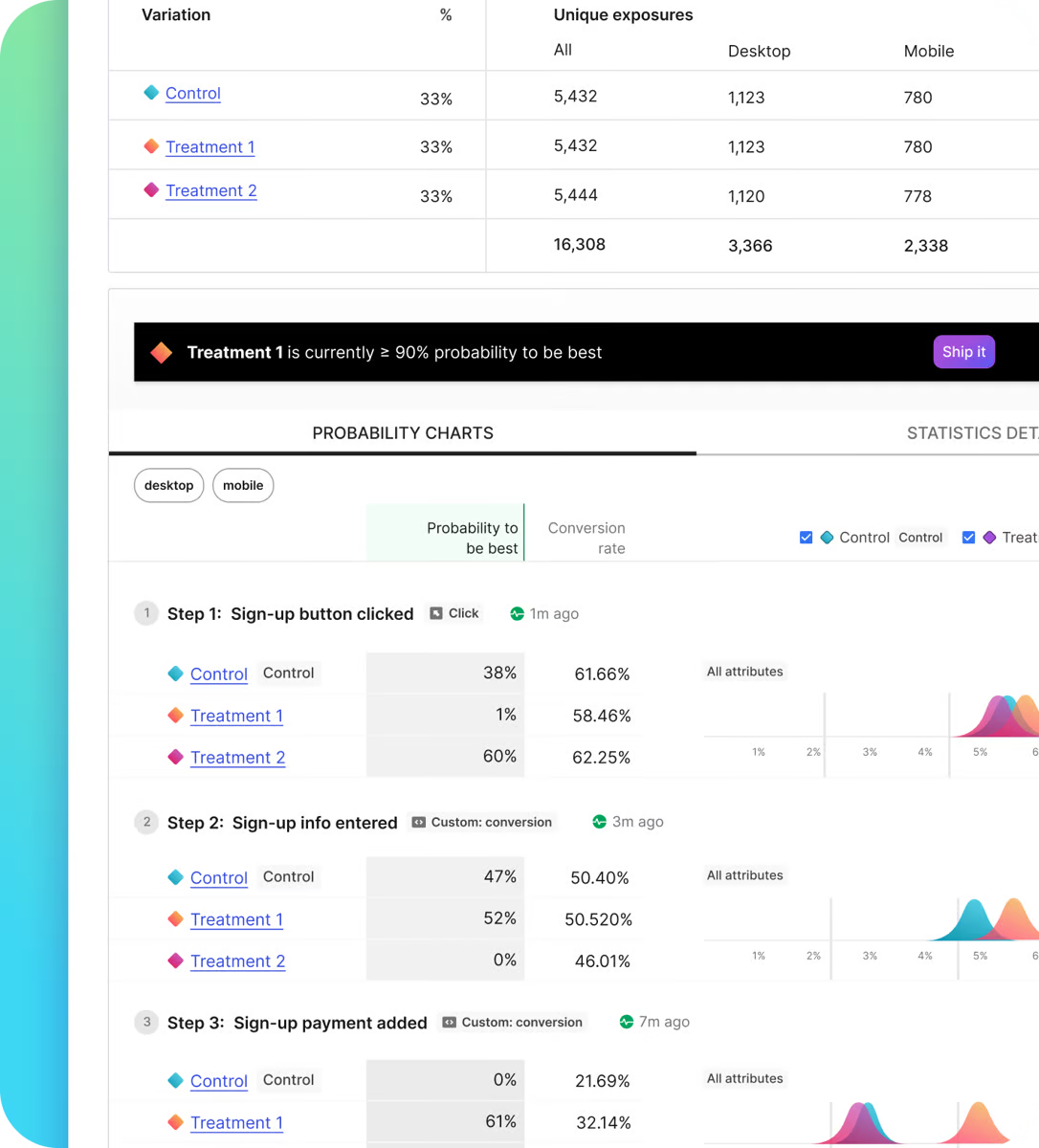

I could see reviewers consistently talking about using feature flags to control releases and run A/B tests by segmenting users or gradually rolling out new functionality. The platform gives teams control over who sees what and when, which makes it ideal for testing changes in real production environments without risking a full release.

The big win here seems to be around flexibility and safety. One user described it as their go-to for selective releases and targeted rollouts. Another mentioned that it helps them run experiments at the infrastructure level, where performance, functionality, or user experience might vary. For me, this means we’re not dragging and dropping elements on a landing page. We’re flipping switches under the hood with precision.
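To make the "flipping switches" idea concrete, here is a minimal sketch of how a percentage rollout behind a feature flag can work in principle. It is not LaunchDarkly's SDK or its actual bucketing algorithm. The function names (`bucket`, `is_enabled`, `assign_variant`) and the flag key `new-checkout` are hypothetical, chosen only for illustration. The sketch assumes each user is hashed to a stable number, so the same user always lands on the same side of the rollout.

```python
import hashlib


def bucket(flag_key: str, user_id: str) -> float:
    """Map a (flag, user) pair to a stable number in [0, 100).

    Hypothetical helper: hashing keeps the assignment deterministic,
    so a user does not flip between experiences across sessions.
    """
    digest = hashlib.sha256(f"{flag_key}:{user_id}".encode("utf-8")).hexdigest()
    # Use the first 32 bits of the hash as a fraction of the full range.
    return int(digest[:8], 16) / 0x100000000 * 100


def is_enabled(flag_key: str, user_id: str, rollout_percent: float) -> bool:
    """Return True if the user falls inside the rollout percentage."""
    return bucket(flag_key, user_id) < rollout_percent


def assign_variant(flag_key: str, user_id: str) -> str:
    """Split users 50/50 between control and treatment for an A/B test.

    A separate salt is used so the variant split is independent of
    the rollout bucket above.
    """
    if bucket(f"{flag_key}:variant", user_id) < 50:
        return "treatment"
    return "control"


if __name__ == "__main__":
    # Gradual rollout: raise the percentage without redeploying code.
    for percent in (5, 25, 100):
        print(percent, is_enabled("new-checkout", "user-42", percent))
    print(assign_variant("new-checkout", "user-42"))
```

Because the bucket is stable, raising the rollout from 5% to 25% only adds users. Nobody who already had the feature loses it, which is what makes gradual rollouts and quick rollbacks predictable.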

That said, a few reviewers did mention some friction. The interface can get a little overwhelming when you’re managing a large number of feature flags, especially across different teams or environments. Some users also noted that the UI update rolled out last year took some time to adjust to. It wasn’t a dealbreaker, but for teams juggling a complex flag strategy, it might take a bit of upfront effort to get fully comfortable.

Overall, in my opinion, LaunchDarkly feels like the right fit for dev-heavy teams that treat experimentation as part of the release pipeline.

If you’re a product manager, engineer, or growth team working closely with code, it’s a powerful way to run safe, targeted experiments. Just know that it’s not designed for quick visual tweaks or content-based split tests. It’s more about managing risk and testing logic at scale.

What I like about LaunchDarkly:

- I really like how LaunchDarkly gives you precise control over who sees what in production. Using feature flags to roll out or roll back changes without redeploying code feels like a superpower for dev teams.

- Reviewers kept highlighting how useful the platform is for targeted experiments and safe testing. For me, it’s a great way to validate changes gradually, especially when you’re dealing with high-stakes releases.

What G2 users like about LaunchDarkly:

“I like LaunchDarkly’s ability to seamlessly manage feature flags and control rollouts, enabling quick, safe deployments and A/B testing without requiring code changes or restarts. Its real-time toggling, comprehensive analytics, and role-based access controls make it invaluable for dynamic feature management and reducing risk during releases.”

– LaunchDarkly Review, Togi Kiran, Senior Software Development Engineer.

What I dislike about LaunchDarkly:

- Managing a large number of feature flags can feel messy at times. I saw a few users mention that it gets confusing to track everything when you’re working across multiple projects or environments.

- The UI redesign from last year came up a few times. Some folks said it took a while to get used to, especially if you were already familiar with the older layout. It sounds like a small adjustment, but still something I’d want to be aware of.

What G2 users dislike about LaunchDarkly:

“The new UI that LaunchDarkly recently launched for feature flagging is not something that I like, I had built muscle memory for using the tool hence it has become a little tough for me to use it.”

– LaunchDarkly Review, Aravind K, Senior Software Engineer.

6. AppMetrica

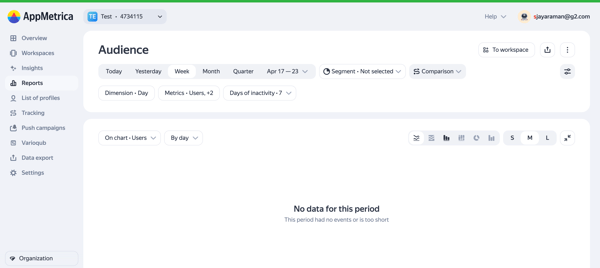

I’ve always thought of AppMetrica as more of a mobile analytics and attribution tool, but after reading through reviews and looking deeper into what it offers, I realized it’s actually capable of much more.

From what I could gather, it’s not a visual A/B testing platform in the traditional sense, but for data-driven product teams, it offers the kind of backend insight you need to understand what’s working and why.

What stood out most to me from user reviews is the ease of integration, the detailed technical documentation, and the ability to slice data quickly with flexible filters.

The A/B testing features are praised for being straightforward, especially when testing mobile app flows or messaging variants. A few users highlighted how valuable it is for monitoring errors, tracking installs, and analyzing in-app events in real time, which makes experimentation feel much more responsive and actionable.

Of course, no tool is perfect. I saw some reviewers note limitations, like inadequate reporting functionalities. A couple of developers mentioned that the UI can be slightly clunky at times, especially when working with larger datasets or trying to drill down into specific performance metrics. These aren’t huge issues, but they’re things I’d definitely want to keep an eye out for.

If I were running growth experiments or behavioral testing within a mobile app, AppMetrica would definitely be on my radar. It’s especially useful for product teams and mobile marketers who care more about what happens under the hood than flashy UI tools.

What I like about AppMetrica:

- I really like how easy it is to filter and break down mobile app data in AppMetrica. Reviewers mentioned the segmentation and real-time analytics are incredibly helpful for spotting trends quickly.

- From what I gather, it is easy to get started and begin tracking meaningful in-app activity right away.

What G2 users like about AppMetrica:

“I personally like the ease of filtration and A/B test functionality, which are crucial in comparison of different user behaviour while implementing new features. I use it daily for error analytics and usage statistics. It was easy to implement and integrate to our daily work. Customer support is reliable and react to questions fast.”

– AppMetrica Review, Anna L, Senior Product Manager.

What I dislike about AppMetrica:

- I’ve noticed that users often call out limited reporting functionalities, which could be a challenge for certain use cases.

- Some reviewers mentioned that the interface can feel a little clunky when you’re working with a lot of data or trying to dig into deeper metrics. I could see that slowing things down.

What G2 users dislike about AppMetrica:

“Being a developer and App metrica provides limited customization in reports and also in ad tracking limitations.”

– AppMetrica Review, Mani M, Developer.

7. MoEngage

I’ve always seen MoEngage as one of those solid, go-to platforms when it comes to customer engagement and automation, especially if you’re looking to work across channels like push, email, in-app, and SMS. It’s designed for scale, and it shows.

You get real-time analytics, user segmentation, campaign automation, and the ability to run A/B tests across your entire funnel. What stood out to me is how often reviewers mention how intuitive the platform feels once you’re inside. A lot of people talked about how easy it is to create and segment campaigns, even for more complex workflows, and how quickly they were able to push live experiments with personalized variants.

MoEngage’s A/B testing features really come to life when paired with its segmentation tools, from what I gathered. The platform gives you fast feedback on what’s working.

Reviewers highlighted how useful it is to test across multiple audience segments without having to set up separate campaigns. And since everything sits on top of a unified customer view, the test results feel tightly connected to actual behavior, not just vanity metrics.

That said, a few reviewers did mention that it can be a little tricky to implement at first, especially if you’re comparing it to lighter tools. One user also mentioned pricing being a bit on the higher side, which tracks with the level of capability you’re getting. But overall, complaints were rare, and I’d call them things to be aware of rather than major red flags.

If I were on a CRM or lifecycle marketing team looking to run cross-channel experiments with deep behavioral insights, MoEngage would absolutely be on my shortlist.

What I like about MoEngage:

- I really like how easy it is to build and segment campaigns in MoEngage. Reviewers kept mentioning how intuitive the platform feels, even when you’re setting up complex automation or multichannel journeys.

- The A/B testing features seem super practical, especially with how seamlessly they tie into audience segmentation. I like that I could test different versions without needing to duplicate entire campaigns.

What G2 users like about MoEngage:

“I love that LogicGate is incredibly customizable to meet your organization’s specific needs; however, there are also templates and applications to get you started if you aren’t sure how to proceed.”

– MoEngage Review, Ashleigh G.

What I dislike about MoEngage:

- A few users mentioned that the initial setup can be a bit tricky. I get the sense that while the platform is powerful, it might take some time to get comfortable with everything if you’re new to it.

- There were also some comments about pricing being a little steep. It’s something I’d definitely want to factor in before going all in.

What G2 users dislike about MoEngage:

“While MoEngage is a powerful platform, there are a few areas that could use improvement. The platform’s reporting capabilities, although robust, could benefit from more customization options to better tailor insights to our specific needs. Occasionally, the system can be a bit slow when handling large datasets, which can be frustrating when working under tight deadlines.

Additionally, while the platform is generally intuitive, there is a slight learning curve for new users when navigating more advanced features. However, these issues are relatively minor compared to the overall benefits the platform provides.”

– MoEngage Review, Nabeel J, Client Engagement Manager.

Frequently asked questions (FAQ) on A/B testing tools

1. What is A/B testing and why does it matter?

A/B testing is the process of comparing two or more variations of a message, layout, or experience to see which one performs better. It’s how you replace guesswork with real data, whether you’re optimizing a subject line, a product page, or a user journey. For marketers, product teams, and growth leads, it’s one of the most effective ways to learn what actually works.
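As a rough illustration of what "performs better" can mean in practice, the sketch below compares the conversion rates of two variants with a two-proportion z-test. This is one common approach, not the method any specific tool in this list is confirmed to use, and the function name and the sample numbers are made up for the example.

```python
import math


def two_proportion_z_test(conv_a: int, n_a: int, conv_b: int, n_b: int):
    """Compare conversion rates of variants A and B.

    Returns (z, p_value), where p_value is two-sided. Illustrative
    helper only; real platforms may use different statistics.
    """
    rate_a = conv_a / n_a
    rate_b = conv_b / n_b
    # Pooled rate under the assumption that A and B perform the same.
    pooled = (conv_a + conv_b) / (n_a + n_b)
    std_err = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    if std_err == 0:
        raise ValueError("Cannot test: no variation in outcomes.")
    z = (rate_b - rate_a) / std_err
    # Two-sided p-value from the standard normal distribution.
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return z, p_value


if __name__ == "__main__":
    # Made-up numbers: 200 of 1,000 users converted on A, 260 of 1,000 on B.
    z, p = two_proportion_z_test(200, 1000, 260, 1000)
    print(f"z = {z:.2f}, p = {p:.4f}")
```

A small p-value suggests the difference between variants is unlikely to be noise. How small is "small enough" is a judgment call that teams usually settle on before the test starts.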

2. Which A/B testing tool is the best?

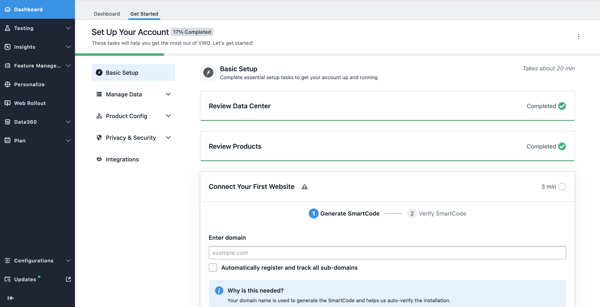

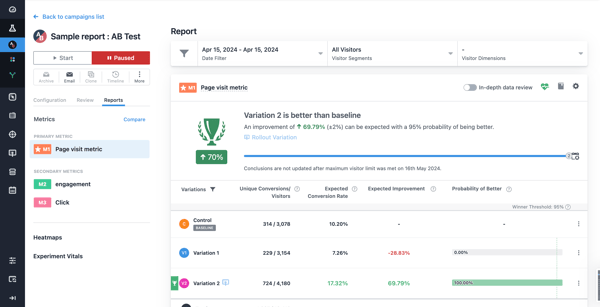

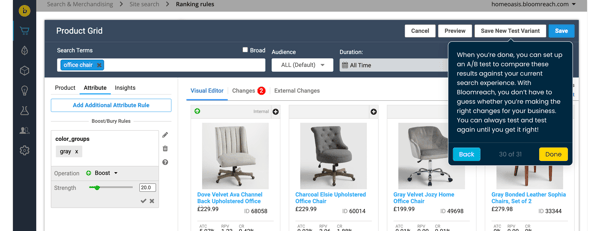

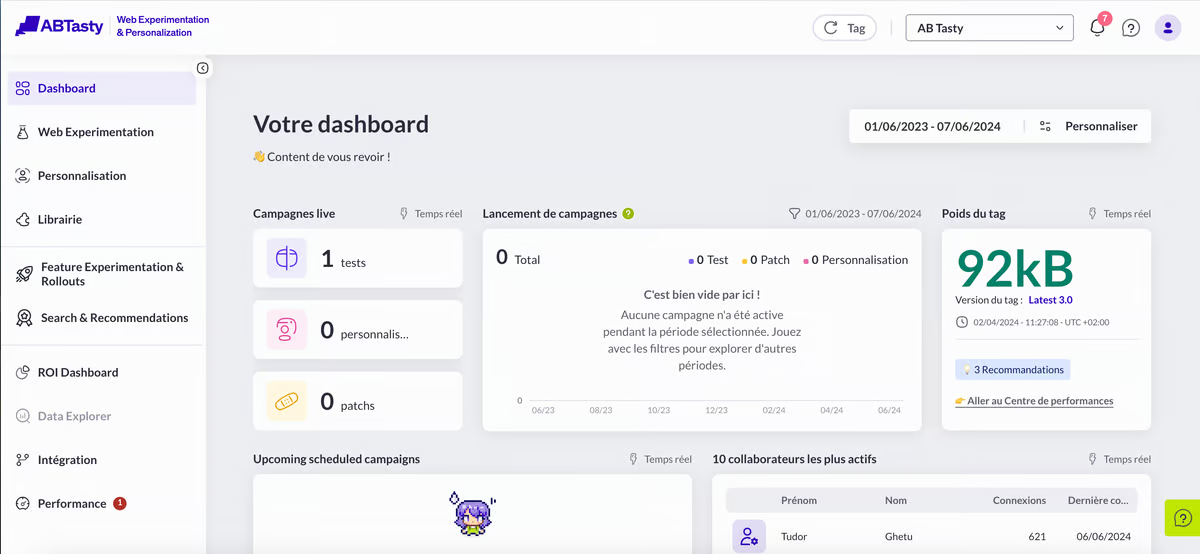

There’s no single “best” tool. It really depends on your team, goals, and how technical you want to get. For example, tools like VWO and AB Tasty are great for marketers who want visual testing options. LaunchDarkly is a better fit for developers and product teams testing feature flags. Platforms like MoEngage, Netcore, and AppMetrica shine when A/B testing is part of broader customer engagement or app analytics strategies.

3. Can I run A/B tests without a developer?

Yes, many tools offer no-code or low-code setups, especially those built for marketing or growth teams. Platforms like AB Tasty, VWO, and MoEngage make it possible to create and launch tests directly from their dashboards. That said, some tools, especially those focused on backend or feature-level testing, may require developer involvement.

4. Are free A/B testing tools worth trying?

Yes, if you’re just getting started or working with a small team, free A/B testing tools can be a great way to dip your toes in. They often come with limitations—like caps on traffic volume or fewer targeting options—but they’re still useful for testing headlines, CTAs, or basic campaign flows. Just keep in mind that as your experimentation strategy matures, you’ll probably outgrow them pretty quickly.

5. How do I choose the right A/B testing tool?

Start with your use case. If you’re testing marketing messages or landing pages, tools like VWO, AB Tasty, or MoEngage might be ideal. For app analytics and user behavior tracking, AppMetrica could make more sense. If you’re on the product or dev side, LaunchDarkly offers deeper control through feature flags and server-side experiments. Prioritize tools that fit how your team works, not just what looks shiny on the surface.

6. How do I choose the right A/B testing tool?

Start by figuring out what kind of experiments you’re running and who’s going to run them. If you’re testing web pages, CTAs, or content with minimal dev involvement, tools like VWO, AB Tasty, or Optimizely are popular picks. Google Optimize used to be a common choice for simple setups, but Google sunset it in September 2023.

If you’re focused on mobile engagement or multichannel journeys, like testing push notifications, email, or in-app flows, look at platforms like MoEngage, Netcore, or CleverTap.

For product and engineering teams testing feature rollouts or backend logic, LaunchDarkly, Split, or Flagsmith are better fits with strong feature flagging and targeting capabilities. Also consider how well the tool integrates with your current stack, your team’s technical comfort level, and whether you’ll need advanced segmentation, personalization, or statistical modeling out of the box.

The right tool is the one that fits your goals without adding unnecessary complexity.

Split tests made simple

If there’s one thing I’ve learned while diving into A/B testing tools, it’s that experimentation isn’t just a feature, it’s a mindset. Whether you’re optimizing landing pages, rolling out new features, or fine-tuning omnichannel campaigns, having the right testing platform in place gives you the power to validate what works before making it permanent. And that peace of mind? It’s invaluable.

Another big takeaway? Your choice of tool should reflect the team running the test. A great feature set doesn’t matter if it’s too complex for the people using it. If your marketers are hands-on, they’ll need clean UIs, drag-and-drop journeys, and fast reporting. If your engineers are doing the testing, they’ll care more about API access, flag control, and integration with CI/CD pipelines. Choosing the right tool means choosing the right workflow.

At the end of the day, A/B testing isn’t about proving who had the better idea. It’s about building better experiences with less risk. And when you pair the right tool with the right team, that’s when experimentation stops feeling like guesswork and starts driving real growth.

Need more help? Explore the best conversion rate optimization tools on G2 to find the right platform for your goals.