
In this article, you will learn seven practical, production-grade considerations that determine whether agentic AI delivers business value or becomes an expensive experiment.

Topics we will cover include:

- How token economics change dramatically from pilot to production.

- Why non-determinism complicates debugging, evaluation, and multi-agent orchestration.

- What it really takes to integrate agents with enterprise systems and long-term memory safely.

Without further delay, let’s begin.

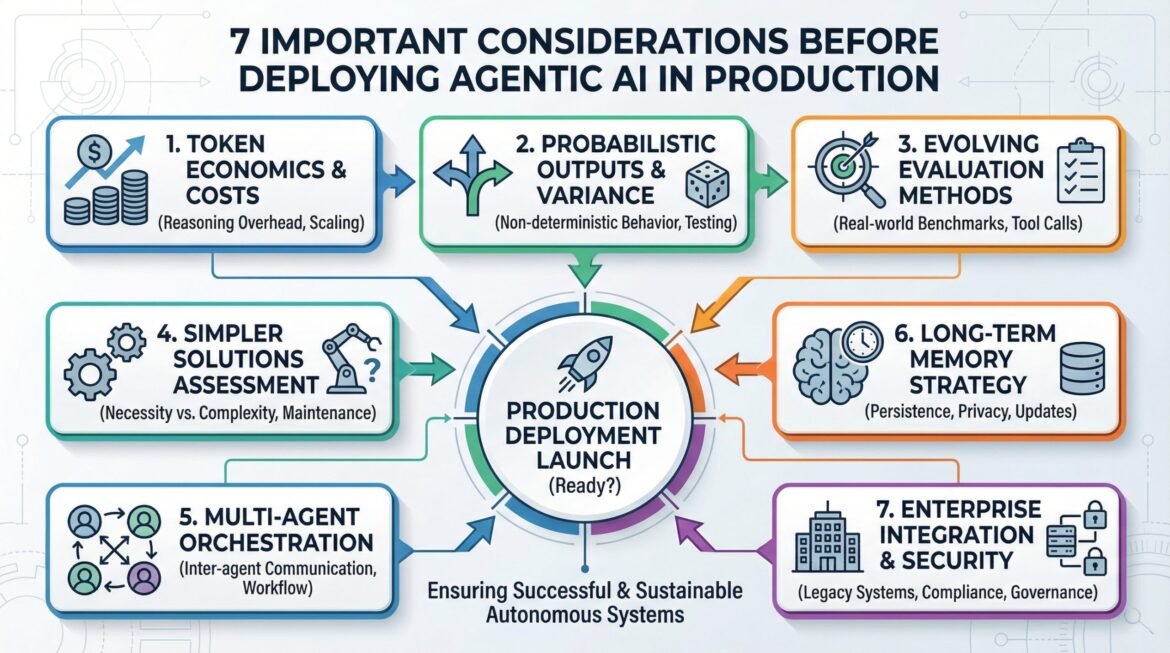

7 Important Considerations Before Deploying Agentic AI in Production

Image by Author

Introduction

The promise of agentic AI is compelling: autonomous systems that reason, plan, and execute complex tasks with minimal human intervention. However, Gartner predicts that over 40% of agentic AI projects will be canceled by the end of 2027, citing “escalating costs, unclear business value or inadequate risk controls.”

Understanding these seven considerations can help you avoid becoming part of that statistic. If you’re new to agentic AI, The Roadmap for Mastering Agentic AI in 2026 provides essential foundational knowledge.

1. Understanding Token Economics in Production

During pilot testing, token costs seem manageable. Production is different. Claude Sonnet 4.5 costs \$3 per million input tokens and \$15 per million output tokens, and extended reasoning can multiply these costs significantly because thinking tokens are billed as output tokens.

Consider a customer service agent processing 10,000 queries daily. If each query uses 5,000 tokens (roughly 3,750 words), that’s 50 million tokens daily, or $150/day for input tokens. But this simplified calculation misses the reality of agentic systems.

Agents don’t just read and respond. They reason, plan, and iterate. A single user query triggers an internal loop: the agent reads the question, searches a knowledge base, evaluates results, formulates a response, validates it against company policies, and potentially revises it. Each step consumes tokens. What appears as one 5,000-token interaction might actually consume 15,000-20,000 tokens when you count the agent’s internal reasoning.

Now the math changes. If reasoning overhead means each user query consumes 4x the visible token count, you’re looking at 200 million input tokens daily. That’s \$600/day for input tokens alone. Output tokens cost five times as much: if output adds another 20-30% on top of that volume (40-60 million tokens at \$15 per million), that’s a further \$600-900/day, for a total of \$1,200-1,500/day. Scale that across a year, and a single use case runs roughly \$440,000-550,000 annually.
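The estimate is easy to get wrong by hand, so it helps to make every assumption an explicit parameter. The sketch below is a minimal calculator, assuming the 4x reasoning overhead is billed entirely as input and that output adds 20-30% of that input volume at the output rate; the function name and parameters are illustrative, not part of any pricing API.

```python
def daily_cost(queries: int, visible_tokens: int, overhead: float,
               output_ratio: float, input_price: float, output_price: float) -> dict:
    """Estimate daily and annual spend for one agent use case.

    Assumptions (illustrative, not universal):
    - visible tokens per query are multiplied by `overhead` for internal
      reasoning, and all of those tokens are billed as input;
    - output adds `output_ratio` times the input volume;
    - prices are USD per million tokens.
    """
    input_tokens = queries * visible_tokens * overhead
    output_tokens = input_tokens * output_ratio
    input_cost = input_tokens / 1e6 * input_price
    output_cost = output_tokens / 1e6 * output_price
    total = input_cost + output_cost
    return {"input_cost": input_cost, "output_cost": output_cost,
            "total": total, "annual": total * 365}


for ratio in (0.2, 0.3):
    est = daily_cost(10_000, 5_000, 4, ratio, input_price=3.0, output_price=15.0)
    print(f"output ratio {ratio:.0%}: ${est['total']:,.0f}/day, ${est['annual']:,.0f}/year")
```

With these inputs the sketch reports \$1,200/day at a 20% output ratio and \$1,500/day at 30%. Output tokens, at five times the input price, account for half or more of the total.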

Multi-agent systems intensify this challenge. Three agents collaborating don’t just triple the cost: every inter-agent message adds tokens that a single agent would never spend, so token usage can grow much faster than the number of agents. A workflow requiring five agents to coordinate might involve dozens of inter-agent messages before producing a final result.
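One way token usage grows faster than linearly: if agents coordinate through a shared transcript and every turn re-reads everything posted so far, total input tokens grow roughly with the square of the number of messages. The sketch below assumes exactly that simplified model (not how every framework works), with a fixed, hypothetical message size.

```python
def shared_transcript_tokens(num_messages: int, tokens_per_message: int) -> int:
    """Total input tokens when each turn re-reads the whole shared transcript.

    Simplified, hypothetical model: every message has the same size, and the
    agent producing message k first reads the k messages already posted.
    """
    total_read = 0
    transcript_size = 0  # tokens currently in the shared transcript
    for _ in range(num_messages):
        total_read += transcript_size          # read everything posted so far
        transcript_size += tokens_per_message  # then append this turn's message
    return total_read


for n in (6, 12, 24):
    print(f"{n:>2} messages -> {shared_transcript_tokens(n, 300):,} tokens read")
```

Under this model, doubling the message count from 12 to 24 roughly quadruples the tokens read (19,800 to 82,800), which is why long agent-to-agent exchanges get expensive quickly.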

Choosing the right model for each agent’s specific task becomes essential for controlling costs.
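A minimal sketch of per-agent model assignment, comparing the cost of one request when every agent uses a frontier model versus when the routing and lookup agents use a cheaper one. The "frontier" tier reuses the Sonnet 4.5 prices quoted above; the "small" tier's prices, the agent names, and the token counts are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ModelTier:
    name: str
    input_per_m: float   # USD per million input tokens
    output_per_m: float  # USD per million output tokens


# "frontier" uses the Sonnet 4.5 prices from the text; "small" is hypothetical.
FRONTIER = ModelTier("frontier", 3.0, 15.0)
SMALL = ModelTier("small", 1.0, 5.0)

# Hypothetical assignment: simple routing and lookups get the cheaper model.
ASSIGNMENTS = {"router": SMALL, "order_lookup": SMALL, "synthesizer": FRONTIER}


def call_cost(tier: ModelTier, input_tokens: int, output_tokens: int) -> float:
    return (input_tokens * tier.input_per_m + output_tokens * tier.output_per_m) / 1_000_000


def workflow_cost(usage: dict[str, tuple[int, int]], assignments: dict[str, ModelTier]) -> float:
    """usage maps agent name -> (input_tokens, output_tokens) for one request."""
    return sum(call_cost(assignments[agent], i, o) for agent, (i, o) in usage.items())


usage = {"router": (2_000, 100), "order_lookup": (3_000, 300), "synthesizer": (4_000, 800)}
all_frontier = {agent: FRONTIER for agent in usage}
print(f"all frontier: ${workflow_cost(usage, all_frontier):.4f} per request")
print(f"mixed tiers:  ${workflow_cost(usage, ASSIGNMENTS):.4f} per request")
```

Under these made-up numbers the mixed assignment costs \$0.031 per request instead of \$0.045, about 31% less. Whether a smaller model is accurate enough for each role still has to be confirmed with evaluations.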

2. Embracing Probabilistic Outputs

Traditional software is deterministic: same input, same output every time. LLMs don’t work this way. Even with temperature set to 0, LLMs can exhibit non-deterministic behavior, caused in part by floating-point arithmetic variations in GPU computations.

Research shows accuracy can vary up to 15% across runs with the same deterministic settings, with the gap between best and worst possible performance reaching 70%. This isn’t a bug. It’s how these models work.

For production systems, debugging becomes significantly harder when you can’t reliably reproduce an error. A customer complaint about an incorrect agent response might produce the correct response when you test it. Regulated industries like healthcare and finance face difficulties here, as they often require audit trails showing consistent decision-making processes.
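Because re-running a prompt may not reproduce a failure, it helps to record exactly what the agent saw and produced at the time. A minimal sketch, assuming a hypothetical record layout (the field names are illustrative, not a standard): each step is captured with its model identifier, sampling parameters, prompt, response, and tool calls, plus a SHA-256 digest of the entry. The digest only makes later changes detectable if it is stored somewhere the entry's editor cannot rewrite.

```python
import hashlib
import json
from datetime import datetime, timezone


def _digest(body: dict) -> str:
    # Canonical JSON (sorted keys) so the same content always hashes the same way.
    canonical = json.dumps(body, sort_keys=True).encode("utf-8")
    return hashlib.sha256(canonical).hexdigest()


def trace_record(model: str, params: dict, prompt: str, response: str,
                 tool_calls: list) -> dict:
    """Build one append-only trace entry for a single agent step (hypothetical layout)."""
    record = {
        "timestamp": datetime.now(timezone.utc).isoformat(),
        "model": model,
        "params": params,
        "prompt": prompt,
        "response": response,
        "tool_calls": tool_calls,
    }
    record["sha256"] = _digest(record)
    return record


def verify(record: dict) -> bool:
    """Recompute the digest over every field except the digest itself."""
    body = {k: v for k, v in record.items() if k != "sha256"}
    return _digest(body) == record["sha256"]


entry = trace_record("example-model", {"temperature": 0},
                     "Where is order 123?", "It shipped yesterday.", [])
print(verify(entry))
```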

The solution isn’t trying to force determinism. Instead, build testing infrastructure that accounts for variability. Tools like Promptfoo, LangSmith, and Arize Phoenix let you run evaluations across hundreds or thousands of runs. Rather than testing a prompt once, you run it 500 times and measure the distribution of outcomes. This reveals the variance and helps you understand the range of possible behaviors.
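The core idea behind these tools can be sketched in a few lines: call the agent many times with the same input and summarize the distribution of outcomes rather than trusting a single answer. This is a minimal stand-in, not the API of Promptfoo, LangSmith, or Arize Phoenix; make_fake_agent is a hypothetical stub that simulates a model answering correctly about 90% of the time, so swap in a real model call to use it.

```python
import random
from collections import Counter
from typing import Callable


def run_distribution(agent: Callable[[str], str], prompt: str,
                     expected: str, runs: int = 500) -> dict:
    """Run the same prompt many times and summarize the spread of outcomes."""
    outcomes = Counter(agent(prompt) for _ in range(runs))
    return {
        "pass_rate": outcomes[expected] / runs,
        "distinct_outputs": len(outcomes),
        "most_common": outcomes.most_common(3),
    }


def make_fake_agent(seed: int) -> Callable[[str], str]:
    """Hypothetical stub standing in for a real, non-deterministic model call."""
    rng = random.Random(seed)

    def agent(prompt: str) -> str:
        r = rng.random()
        if r < 0.90:
            return "refund approved"
        if r < 0.97:
            return "refund denied"
        return "escalate to human"

    return agent


report = run_distribution(make_fake_agent(seed=7),
                          "Can I get a refund for order 123?", "refund approved")
print(report)
```

Exact string matching is the simplest possible grader; real evaluations usually score outputs with rules, reference answers, or a model-based judge.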

3. Evaluation Methods Are Still Evolving

Agentic AI systems can excel on laboratory benchmarks, but production is messy. Real users ask ambiguous questions, provide incomplete context, and have unstated assumptions. The evaluation infrastructure to measure agent performance in these conditions is still developing.

Beyond generating correct answers, production agents must execute correct actions. An agent might understand a user’s request perfectly but generate a malformed tool call that breaks the entire pipeline. Consider a customer service agent with access to a user management system. The agent correctly identifies that it needs to update a user’s subscription tier. But instead of calling update_subscription(user_id=12345, tier="premium"), it generates update_subscription(user_id="12345", tier=premium). The user ID is now a string instead of an integer, and premium has lost its quotes, so it is no longer a string literal. Either mistake is enough to make a strictly typed API raise an exception.
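One defensive pattern is to check a model-generated call against the tool's declared parameter types before executing it, and hand any errors back to the model instead of letting an exception break the pipeline. A minimal sketch using Python type hints; update_subscription here is a hypothetical stand-in for the real tool. The unquoted premium would typically fail even earlier, when the model's JSON arguments are parsed, so this example focuses on the type mismatch.

```python
from typing import get_type_hints


def update_subscription(user_id: int, tier: str) -> str:
    # Hypothetical tool; a real one would call the user management system.
    return f"user {user_id} moved to {tier}"


def validate_call(func, kwargs: dict) -> list[str]:
    """Return a list of problems with a model-generated call; empty means OK."""
    hints = get_type_hints(func)
    hints.pop("return", None)  # only check parameters, not the return annotation
    errors = [f"unexpected argument: {name}" for name in kwargs if name not in hints]
    for name, expected in hints.items():
        if name not in kwargs:
            errors.append(f"missing argument: {name}")
        elif type(kwargs[name]) is not expected:
            # Exact type match: "12345" is rejected where an int is declared.
            errors.append(f"{name} should be {expected.__name__}, "
                          f"got {type(kwargs[name]).__name__}")
    return errors


good = {"user_id": 12345, "tier": "premium"}
bad = {"user_id": "12345", "tier": "premium"}
print(validate_call(update_subscription, good))  # []
print(validate_call(update_subscription, bad))
```

The error strings from validate_call can be returned to the model as the tool result so it can correct the call on its next turn.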

Research on structured output reliability shows that even frontier models fail to follow JSON schemas 5-10% of the time under complex scenarios. When an agent makes 50 tool calls per user interaction, that 5% failure rate becomes a significant operational issue.
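To see why, assume each call fails independently with the same probability p. The chance that at least one of n calls fails is 1 - (1 - p)^n, so at p = 0.05 and n = 50 roughly 92% of interactions would hit at least one malformed call. Independence is a simplifying assumption here; real failures may cluster.

```python
def prob_any_failure(per_call_failure: float, calls: int) -> float:
    """Probability that at least one of `calls` independent tool calls fails."""
    return 1 - (1 - per_call_failure) ** calls


for p in (0.05, 0.10):
    print(f"per-call failure {p:.0%}, 50 calls -> "
          f"{prob_any_failure(p, 50):.1%} of interactions affected")

# Validating each call and retrying once (still assuming independent failures)
# means a call only fails if both attempts fail, i.e. with probability p**2.
print(f"with one retry at 5%: {prob_any_failure(0.05 ** 2, 50):.1%}")
```

Under the same assumptions, a single validate-and-retry step drops the per-interaction failure rate from about 92% to about 12%.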

Gartner notes that many agentic AI projects fail because “current models don’t have the maturity and agency to autonomously achieve complex business goals”. The gap between controlled evaluation and real-world performance often only becomes apparent after deployment.

4. Simpler Solutions Often Work Better

The flexibility of agentic AI creates a temptation to use it everywhere. However, many use cases don’t require autonomous reasoning. They need reliable, predictable automation.

Gartner found that “many use cases positioned as agentic today don’t require agentic implementations”. Ask: Does the task require handling novel situations? Does it benefit from natural language understanding? If not, traditional automation will likely serve you better.
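In practice, the answer is often a hybrid: handle predictable requests with deterministic rules and reserve the agent for inputs the rules don't cover. A minimal sketch; the patterns, replies, route names, and agent callback are hypothetical placeholders.

```python
import re
from typing import Callable, Optional

# Hypothetical deterministic rules for predictable, well-understood requests.
RULES = [
    (re.compile(r"\breset (my )?password\b", re.IGNORECASE),
     lambda m: "Sent a password reset link."),
    (re.compile(r"\border (\d+) status\b", re.IGNORECASE),
     lambda m: f"Looking up status for order {m.group(1)}."),
]


def handle(request: str,
           agent: Optional[Callable[[str], str]] = None) -> tuple[str, str]:
    """Return (route, reply): rules first, the agent only for unmatched requests."""
    for pattern, action in RULES:
        match = pattern.search(request)
        if match:
            return "rule", action(match)
    if agent is None:
        return "human", "Routing to a human support representative."
    return "agent", agent(request)


print(handle("Please reset my password"))
print(handle("What is my order 4521 status?"))
print(handle("My package arrived damaged, what are my options?",
             agent=lambda r: "(agent-generated reply)"))
```

The rule path is cheap, deterministic, and easy to test; only the genuinely open-ended request pays for, and carries the variability of, an agent call.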

The decision becomes clearer when you consider maintenance burden. Traditional automation breaks in predictable ways. Agent failures are murkier. Why did the agent misinterpret this particular phrasing? The debugging process for probabilistic systems requires different skills and more time.

5. Multi-Agent Systems Require Significant Orchestration

Single agents are complex. Multi-agent systems are considerably more so. A seemingly simple customer question might trigger this internal workflow: the Router Agent determines which specialist is needed, the Order Lookup Agent queries the database, the Shipping Agent checks tracking numbers, and the Customer Service Agent synthesizes a response. Each handoff consumes tokens.

Router Agent to Order Lookup: 200 tokens. Order Lookup to Shipping Agent: 300 tokens. Shipping Agent to Customer Service Agent: 400 tokens. Back through the chain: 350 tokens. Final synthesis: 500 tokens. The internal conversation totaled 1,750 tokens before the user saw a response. Multiply this across thousands of interactions daily, and the agent-to-agent communication becomes a major cost center.
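The arithmetic is easy to keep in a small script so you can update it as the workflow changes. This sketch tallies the illustrative handoff counts above and scales them to the 10,000 daily queries from the token economics example; the dictionary keys are just labels.

```python
# Token counts per internal handoff, taken from the illustrative example above.
HANDOFFS = {
    "router -> order lookup": 200,
    "order lookup -> shipping": 300,
    "shipping -> customer service": 400,
    "back through the chain": 350,
    "final synthesis": 500,
}

DAILY_INTERACTIONS = 10_000  # query volume from the token economics example


def internal_tokens(handoffs: dict[str, int]) -> int:
    """Tokens spent on agent-to-agent traffic for a single user interaction."""
    return sum(handoffs.values())


per_interaction = internal_tokens(HANDOFFS)
per_day = per_interaction * DAILY_INTERACTIONS
print(f"{per_interaction:,} tokens per interaction, {per_day:,} per day")
```

At that volume, agent-to-agent messages alone account for 17.5 million tokens a day that the user never sees.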

Research on non-deterministic LLM behavior shows even single-agent outputs vary run-to-run. When multiple agents communicate, this variability compounds. The same user question might trigger a three-agent workflow one time and a five-agent workflow the next.
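Since the path through the agents can't be assumed fixed, one safeguard is to cap each request's hops and tokens and record the path it actually took, so runaway runs stop early and unusual paths can be compared later. A minimal sketch; RunBudget, its limits, and the agent names are hypothetical.

```python
from dataclasses import dataclass, field


class BudgetExceeded(RuntimeError):
    pass


@dataclass
class RunBudget:
    """Hypothetical per-request limits for a multi-agent run."""
    max_hops: int = 5
    max_tokens: int = 5_000
    hops: int = 0
    tokens: int = 0
    path: list = field(default_factory=list)

    def charge(self, agent: str, tokens: int) -> None:
        """Record one agent step and stop the run if either limit is exceeded."""
        self.hops += 1
        self.tokens += tokens
        self.path.append(agent)
        if self.hops > self.max_hops or self.tokens > self.max_tokens:
            raise BudgetExceeded(
                f"stopped after {self.path}: {self.hops} hops, {self.tokens} tokens")


budget = RunBudget(max_hops=4, max_tokens=2_000)
try:
    for agent, tokens in [("router", 200), ("order_lookup", 300), ("shipping", 400),
                          ("order_lookup", 350), ("customer_service", 500)]:
        budget.charge(agent, tokens)
except BudgetExceeded as exc:
    print(exc)
```

Logging budget.path for every request also gives you the data to see how often the same question takes a three-agent versus a five-agent route.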

6. Long-term Memory Adds Implementation Complexity

Giving agents the ability to remember information across sessions introduces technical and operational challenges. Which information should be remembered? How long should it persist? What happens when remembered information becomes outdated?

The three commonly cited types of long-term memory (episodic, semantic, and procedural) each require different storage strategies and update policies.

Privacy and compliance add complexity. If your agent remembers customer information, GDPR’s right to be forgotten (the right to erasure) means you need mechanisms to selectively delete information. The technical architecture can span vector databases, graph databases, and traditional databases, and deletion has to reach every one of them. Each store adds operational overhead and failure points.
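A minimal sketch of selective deletion across several memory stores, assuming each store exposes a hypothetical delete_user method. InMemoryStore is a stand-in for a vector, graph, or relational backend; a real implementation would also have to handle backups, caches, and derived data such as embeddings.

```python
from typing import Protocol


class MemoryStore(Protocol):
    name: str

    def delete_user(self, user_id: str) -> int: ...


class InMemoryStore:
    """Hypothetical stand-in for a vector, graph, or relational store."""

    def __init__(self, name: str):
        self.name = name
        self.rows: list[dict] = []

    def delete_user(self, user_id: str) -> int:
        """Remove every row belonging to user_id and return how many were removed."""
        before = len(self.rows)
        self.rows = [row for row in self.rows if row["user_id"] != user_id]
        return before - len(self.rows)


def forget_user(user_id: str, stores: list[MemoryStore]) -> dict[str, int]:
    """Delete a user's memories from every store; report deletions per store."""
    return {store.name: store.delete_user(user_id) for store in stores}


vectors = InMemoryStore("vector")
graph = InMemoryStore("graph")
vectors.rows += [{"user_id": "u1", "text": "prefers email"},
                 {"user_id": "u2", "text": "prefers phone"}]
graph.rows += [{"user_id": "u1", "edge": "owns order 123"}]
print(forget_user("u1", [vectors, graph]))
```

Returning per-store counts gives you a record that the deletion actually reached each backend, which is useful evidence when responding to an erasure request.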

Memory also introduces correctness challenges. If an agent remembers outdated preferences, it causes poor service. You need mechanisms to detect stale information and validate that remembered facts are still accurate.
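A minimal sketch of type-specific update policies and staleness detection, assuming each memory records when it was last verified. The revalidation windows per memory type are hypothetical values, and what you do with stale records (re-confirm with the user, re-derive them from a system of record, or drop them) is a policy decision.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta, timezone
from typing import Optional

# Hypothetical revalidation windows per memory type; tune for your domain.
MAX_AGE = {
    "episodic": timedelta(days=30),     # what happened in past sessions
    "semantic": timedelta(days=180),    # facts and preferences about the user
    "procedural": timedelta(days=365),  # learned how-to steps
}


@dataclass
class MemoryRecord:
    kind: str
    content: str
    last_verified: datetime

    def is_stale(self, now: datetime) -> bool:
        return now - self.last_verified > MAX_AGE[self.kind]


def usable_memories(records: list, now: Optional[datetime] = None) -> tuple[list, list]:
    """Split records into (fresh, needs_revalidation)."""
    if now is None:
        now = datetime.now(timezone.utc)
    fresh = [r for r in records if not r.is_stale(now)]
    stale = [r for r in records if r.is_stale(now)]
    return fresh, stale


now = datetime(2025, 1, 1, tzinfo=timezone.utc)
records = [
    MemoryRecord("semantic", "prefers phone calls", now - timedelta(days=200)),
    MemoryRecord("episodic", "asked about order 123", now - timedelta(days=10)),
]
fresh, stale = usable_memories(records, now)
print([r.content for r in fresh], [r.content for r in stale])
```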

7. Enterprise Integration Takes Time and Planning

The demo works beautifully. Then you try to deploy it in your enterprise environment. Your agent needs to authenticate with 15 different internal systems, each with its own security model. IT security requires a full audit. Compliance wants documentation. Legal needs to review data handling.

Legacy system integration presents its own challenges. Your agent might need to interact with systems that don’t have modern APIs or extract data from PDFs generated by decades-old reporting systems. Many enterprise systems weren’t designed with AI agent access in mind.

The tool-calling risks described in Section 3 become especially problematic here. When your agent calls internal APIs, malformed requests might trigger alerts, consume rate limit quotas, or corrupt data. Building proper schema validation for all internal tool calls becomes essential.
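A minimal sketch of a validation gate that every model-emitted tool call passes through before it reaches an internal API: parse the JSON, reject unknown tools, and check argument names, types, and allowed values. The registry, the tier values, and the call layout (a JSON object with name and arguments keys) are hypothetical; in production you might express the same rules with a JSON Schema validator.

```python
import json

# Hypothetical registry of allowed tools with minimal schemas.
TOOL_SCHEMAS = {
    "update_subscription": {
        "types": {"user_id": int, "tier": str},
        "allowed_values": {"tier": {"free", "basic", "premium"}},
    },
}


def check_tool_call(raw: str) -> tuple[bool, str]:
    """Validate a model-emitted tool call; return (ok, reason)."""
    try:
        call = json.loads(raw)
    except json.JSONDecodeError as exc:
        return False, f"not valid JSON: {exc.msg}"
    if not isinstance(call, dict):
        return False, "tool call must be a JSON object"
    name = call.get("name")
    if not isinstance(name, str) or name not in TOOL_SCHEMAS:
        return False, f"unknown tool: {name!r}"
    schema = TOOL_SCHEMAS[name]
    args = call.get("arguments")
    if not isinstance(args, dict):
        return False, "arguments must be a JSON object"
    unexpected = sorted(set(args) - set(schema["types"]))
    if unexpected:
        return False, f"unexpected arguments: {unexpected}"
    for arg, expected in schema["types"].items():
        if arg not in args:
            return False, f"missing argument: {arg}"
        # Exact type check: "12345" is rejected where an int is required.
        if type(args[arg]) is not expected:
            return False, f"{arg} must be {expected.__name__}, got {type(args[arg]).__name__}"
    for arg, allowed in schema["allowed_values"].items():
        if args[arg] not in allowed:
            return False, f"{arg} must be one of {sorted(allowed)}"
    return True, "ok"


calls = [
    '{"name": "update_subscription", "arguments": {"user_id": 12345, "tier": "premium"}}',
    '{"name": "update_subscription", "arguments": {"user_id": "12345", "tier": premium}}',
    '{"name": "update_subscription", "arguments": {"user_id": "12345", "tier": "premium"}}',
    '{"name": "update_subscription", "arguments": {"user_id": 12345, "tier": "platinum"}}',
]
for raw in calls:
    print(check_tool_call(raw))
```

Only calls that return (True, "ok") are forwarded to the internal API; everything else is rejected before it can consume rate limits or touch data.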

Governance frameworks for agentic AI are still emerging. Who approves agent decisions? How do you audit agent actions? What happens when an agent makes a mistake?
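One concrete starting point for these questions is a policy gate in front of every agent action: low-risk actions run automatically, high-risk ones wait for a human, unknown ones are denied by default, and every decision is recorded. A minimal sketch; the action names, risk tiers, and ask_human callback are hypothetical placeholders for your own policy and review workflow.

```python
from dataclasses import dataclass
from typing import Callable

# Hypothetical policy: which actions an agent may take on its own.
AUTO_APPROVED = {"lookup_order", "send_tracking_link"}
NEEDS_HUMAN = {"issue_refund", "update_subscription", "delete_account"}


@dataclass
class Decision:
    action: str
    allowed: bool
    approver: str
    reason: str


def gate(action: str, ask_human: Callable[[str], bool]) -> Decision:
    """Decide whether an agent action may run, and who approved it."""
    if action in AUTO_APPROVED:
        return Decision(action, True, "policy", "auto-approved action")
    if action in NEEDS_HUMAN:
        ok = ask_human(action)
        reason = "approved by reviewer" if ok else "rejected by reviewer"
        return Decision(action, ok, "human", reason)
    # Default-deny anything the policy does not recognize.
    return Decision(action, False, "policy", "unknown action denied")


audit_log: list[Decision] = []
for action in ("lookup_order", "issue_refund", "format_disk"):
    decision = gate(action, ask_human=lambda a: a == "issue_refund")
    audit_log.append(decision)
    print(decision)
```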

Moving Forward Thoughtfully

These considerations aren’t meant to discourage agentic AI deployment. They’re meant to ensure successful deployments. Organizations that acknowledge these realities upfront are far more likely to succeed.

The key is matching organizational readiness to complexity. Start with well-defined use cases that have clear value propositions. Build incrementally, validating each capability before adding the next. Invest in observability from day one. And be honest about whether your use case truly requires an agent.

The future of agentic AI is promising, but getting there successfully requires a clear assessment of both opportunities and challenges.