
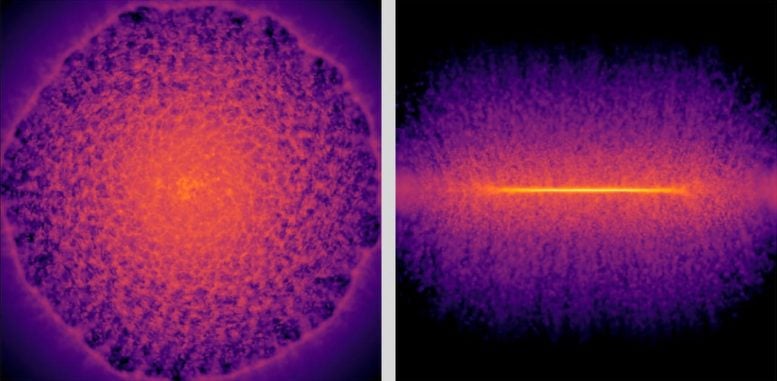

A research team in Japan has created a groundbreaking Milky Way simulation that follows more than 100 billion stars with a level of detail that was once thought impossible.

By training AI on how supernova explosions evolve and combining that knowledge with large-scale physics models, they produced a galaxy-scale simulation over 100 times faster than previous attempts.

AI-Powered Leap in Milky Way Modeling

Researchers led by Keiya Hirashima at the RIKEN Center for Interdisciplinary Theoretical and Mathematical Sciences (iTHEMS) in Japan, working with collaborators from The University of Tokyo and Universitat de Barcelona in Spain, have created the first Milky Way simulation capable of tracking more than 100 billion individual stars over 10,000 years of simulated time. They achieved this by combining artificial intelligence (AI) with advanced numerical methods. The simulation resolves 100 times more individual stars than previous state-of-the-art models and ran more than 100 times faster.

The work, presented at the international supercomputing conference SC ’25, represents a major advance for astrophysics, high-performance computing, and AI-driven modeling. This technique also has potential applications beyond space science, including simulations used to study climate patterns and weather behavior.

Astrophysicists have long aimed to build a Milky Way model detailed enough to follow each star on its own path. Such a tool would let researchers test theories about how galaxies form, how they change shape over time, and how stars evolve, and compare the results directly with astronomical observations. Creating a model like this is extremely challenging because it has to account for gravity, fluid motion, the effects of supernova explosions, and the processes that generate new elements. Each of these unfolds on very different time and size scales, which makes the calculations complex.

Why Simulating Every Star Is So Hard

Until now, no team has been able to simulate a galaxy the size of the Milky Way while preserving the detail needed to follow individual stars. The most advanced earlier simulations were limited to systems of about one billion solar masses, whereas the Milky Way contains more than 100 billion stars.

As a result, in a Milky Way-scale model the smallest “particle” represents a cluster of about 100 stars rather than a single star. This averages out important small-scale behavior, so only large-scale galactic features can be modeled accurately. The core difficulty is the timestep, the interval between successive steps of the simulation: rapid changes involving individual stars, such as the development of a supernova, can only be captured if the simulation advances in sufficiently small increments.
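To see why small timesteps are so expensive, here is a minimal Python sketch of the simplest scheme, in which every particle advances with one shared timestep set by the particle that changes fastest. The function name and all timestep values are hypothetical and chosen only for illustration; production N-body codes typically give particles individual (hierarchical) timesteps, which softens this cost but does not eliminate it.

```python
import math

def global_step_count(t_end_yr: float, particle_dts_yr: list[float]) -> int:
    """Steps needed when all particles share the smallest required timestep."""
    dt_shared = min(particle_dts_yr)  # the fastest-changing particle sets the pace
    return math.ceil(t_end_yr / dt_shared)

# Hypothetical timesteps: quiet stars could take 10,000-year steps, but gas
# heated by a supernova needs 10-year steps (illustrative values only).
quiet = [1e4] * 1000
with_supernova = quiet + [1e1]

print(global_step_count(1e6, quiet))           # 100 steps to cover 1 million years
print(global_step_count(1e6, with_supernova))  # 100000 steps to cover 1 million years
```

A single supernova-affected particle multiplies the step count by a thousand in this toy setup, even though the other 1,000 particles did not need the finer resolution.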

The Computational Wall: Limits of Traditional Supercomputing

Reducing the timestep greatly increases the computational load. Even the most advanced conventional physics-based simulation available today would need 315 hours to calculate just 1 million years of Milky Way evolution if it tracked individual stars. At that pace, simulating 1 billion years would take about 36 years of real time. Adding more supercomputer cores is not a practical workaround: they consume enormous amounts of energy, and the gain from each additional core shrinks as more are added.
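The article does not give a scaling model, but Amdahl's law, a standard textbook estimate, illustrates one reason extra cores help less and less: if any fraction of each step must run serially, the speedup can never exceed one over that fraction. The sketch below assumes a hypothetical 0.1% serial fraction; it ignores communication overhead, which typically makes real scaling worse, and says nothing about energy use.

```python
def amdahl_speedup(n_cores: int, serial_fraction: float) -> float:
    """Ideal speedup on n_cores when serial_fraction of the work cannot be parallelized."""
    return 1.0 / (serial_fraction + (1.0 - serial_fraction) / n_cores)

SERIAL_FRACTION = 0.001  # hypothetical: 0.1% of each step is inherently serial

for n in (1_000, 10_000, 100_000):
    s = amdahl_speedup(n, SERIAL_FRACTION)
    # Parallel efficiency = achieved speedup / number of cores used.
    print(f"{n:>7} cores: speedup {s:7.1f}, efficiency {s / n:.1%}")
```

Under this assumption the speedup approaches but never reaches 1 / SERIAL_FRACTION = 1,000, so a hundredfold increase in cores (from 1,000 to 100,000) buys less than a doubling of speed.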

In response to these limitations, Hirashima and the research team designed a new strategy that blends a deep learning surrogate model with standard physical simulation. The surrogate was trained on high-resolution simulations of a supernova and learned to predict how the surrounding gas expands during the 100,000 years following an explosion, so this calculation no longer draws on the resources of the main simulation. This AI-driven shortcut allows the simulation to capture both the large-scale motion of the galaxy and the detailed behavior of events such as supernovae.
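The hybrid idea can be sketched as a loop that advances the whole galaxy with one large step and hands each supernova to a separate predictor that jumps 100,000 years ahead. This is a toy illustration, not the authors' code: the trained neural network is replaced by the analytic Sedov-Taylor blast-wave radius, R = ξ(Et²/ρ)^(1/5), which is a swapped-in stand-in that returns only a shell radius rather than a full gas state. All names, the step size, and the event parameters are hypothetical.

```python
from dataclasses import dataclass

YEAR_S = 3.156e7          # seconds per year
PARSEC_CM = 3.086e18      # centimetres per parsec
XI = 1.15                 # Sedov-Taylor constant for gamma = 5/3 (approx.)
HORIZON_YR = 1e5          # surrogate predicts 100,000 years ahead (from the article)

@dataclass
class Supernova:
    time_yr: float        # simulation time of the explosion
    energy_erg: float     # explosion energy
    density_g_cm3: float  # ambient gas density

def surrogate_radius_pc(sn: Supernova, horizon_yr: float = HORIZON_YR) -> float:
    """Stand-in surrogate: shell radius after horizon_yr, via Sedov-Taylor scaling.

    The real model is a trained neural network that predicts the gas state;
    this analytic formula is only a placeholder playing the same role.
    """
    t_s = horizon_yr * YEAR_S
    radius_cm = XI * (sn.energy_erg * t_s**2 / sn.density_g_cm3) ** 0.2
    return radius_cm / PARSEC_CM

def run_hybrid(t_end_yr: float, dt_yr: float, events: list[Supernova]):
    """Advance with one large step size and offload each supernova to the surrogate."""
    pending = sorted(events, key=lambda sn: sn.time_yr)
    t, steps, predictions = 0.0, 0, []
    while t < t_end_yr:
        # Gravity and hydrodynamics for the whole galaxy would be integrated
        # here with the large step dt_yr (omitted in this sketch).
        t += dt_yr
        steps += 1
        # Explosions inside this step go to the surrogate, so the galaxy never
        # has to drop to the tiny steps a resolved blast wave would need.
        while pending and pending[0].time_yr <= t:
            sn = pending.pop(0)
            # Record when the predicted gas state would be fed back in.
            predictions.append((sn.time_yr + HORIZON_YR, surrogate_radius_pc(sn)))
    return steps, predictions

events = [Supernova(2.5e5, 1e51, 1.67e-24), Supernova(7.0e5, 1e51, 1.67e-23)]
steps, predictions = run_hybrid(t_end_yr=1e6, dt_yr=2e3, events=events)
print(steps, [(round(t), round(r, 1)) for t, r in predictions])
```

The design point the sketch tries to capture is that the step count depends only on the large step size, not on how finely each explosion would otherwise have to be resolved.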

The researchers verified their results through large-scale test runs on RIKEN’s Fugaku supercomputer and The University of Tokyo’s Miyabi Supercomputer System.

Deep Learning Breakthrough for Supernova-Driven Dynamics

The method not only resolves individual stars in a galaxy of more than 100 billion stars; it also simulated 1 million years in just 2.78 hours. At that rate, the target of 1 billion years could be simulated in about 116 days instead of about 36 years.
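The projections in the article are straightforward arithmetic on the two quoted costs, 315 hours and 2.78 hours per million simulated years. The short Python sketch below reproduces them under the same assumption the article's projection makes: that the cost per million years stays constant over the full billion years. The function and constant names are illustrative only.

```python
HOURS_PER_DAY = 24
DAYS_PER_YEAR = 365.25

def project(hours_per_myr: float, target_myr: float = 1000.0) -> tuple[float, float]:
    """Wall-clock (days, years) to reach target_myr, assuming constant cost per Myr."""
    hours = hours_per_myr * target_myr
    days = hours / HOURS_PER_DAY
    return days, days / DAYS_PER_YEAR

baseline_days, baseline_years = project(315.0)  # conventional code (article's figure)
hybrid_days, hybrid_years = project(2.78)       # AI-surrogate code (article's figure)

print(f"conventional: {baseline_days:,.0f} days = {baseline_years:.1f} years")
print(f"hybrid:       {hybrid_days:.1f} days")
print(f"speedup:      {315.0 / 2.78:.0f}x")
```

The result is roughly 13,125 days (just under 36 years) for the conventional approach versus about 116 days for the hybrid one, a ratio of about 113, consistent with the "more than 100 times faster" claim.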

Beyond astrophysics, this approach could transform other multi-scale simulations—such as those in weather, ocean, and climate science—in which simulations need to link both small-scale and large-scale processes.

A New Era for Galaxy Evolution and Climate-Scale Simulations

“I believe that integrating AI with high-performance computing marks a fundamental shift in how we tackle multi-scale, multi-physics problems across the computational sciences,” says Hirashima. “This achievement also shows that AI-accelerated simulations can move beyond pattern recognition to become a genuine tool for scientific discovery—helping us trace how the elements that formed life itself emerged within our galaxy.”

Reference: “The First Star-by-star N-body/Hydrodynamics Simulation of Our Galaxy Coupling with a Surrogate Model” by Keiya Hirashima, Michiko S Fujii, Takayuki R Saitoh, Naoto Harada, Kentaro Nomura, Kohji Yoshikawa, Yutaka Hirai, Tetsuro Asano, Kana Moriwaki, Masaki Iwasawa, Takashi Okamoto and Junichiro Makino, 15 November 2025, SC ’25: Proceedings of the International Conference for High Performance Computing, Networking, Storage and Analysis.

DOI: 10.1145/3712285.3759866

